Konrad Zuse Internet Archive

Who was Konrad Zuse?

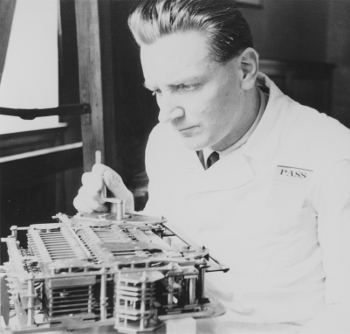

Konrad Zuse (1910-1995) was a computer pioneer who built one of the first program-controlled computing machines. Between 1936 and 1945 he built his first four computing machines: the Z1, Z2, Z3 and Z4. In 1945 he moved to Bavaria, where he continued building computing machines with his newly founded company. There he also designed one of the first high-level programming languages, the so-called Plankalkül. Learn more about Konrad Zuse and his machines in the Encyclopedia or read essays about him.

What is the Konrad Zuse Internet Archive?

This online archive offers access to the digitized original documents from Konrad Zuse's private papers. They include technical drawings, photographs, manuscripts, typescripts and other material, some of it written in shorthand. The originals and the master copies of the digitized images are stored in the archives of the Deutsches Museum. Learn more about the project.

How was the Konrad Zuse Internet Archive implemented?

The Konrad Zuse Internet Archive is based on the open source repository software imeji. Unlike a regular website, a repository manages metadata alongside the images. Metadata are information that describes an image and its content, helping viewers understand the object it presents. imeji is developed by the Max Planck Digital Library, and the Konrad Zuse Internet Archive project contributes to its development in order to create a general solution for projects that want to publish images online. Learn more about imeji.
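To illustrate what "managing metadata alongside the images" can mean in practice, here is a minimal sketch in Python. It is not imeji's data model or API: the `ArchiveItem` class, its field names, the `find` helper and the sample records are all hypothetical placeholders. Each record pairs an image file with descriptive fields, and those fields make the collection searchable in a way a plain page of images is not.

```python
from dataclasses import dataclass, field

@dataclass
class ArchiveItem:
    """One digitized document: an image file plus descriptive metadata.
    Hypothetical field names, not imeji's actual schema."""
    image_file: str
    title: str
    document_type: str            # e.g. "technical drawing", "photograph", "manuscript"
    shorthand: bool = False       # is the document written in shorthand?
    keywords: list = field(default_factory=list)

def find(items, **criteria):
    """Return the items whose metadata fields equal every given criterion."""
    return [item for item in items
            if all(getattr(item, key) == value for key, value in criteria.items())]

# Hypothetical sample records, not actual holdings of the archive.
items = [
    ArchiveItem("sample_0001.tif", "Sample drawing", "technical drawing"),
    ArchiveItem("sample_0002.tif", "Sample manuscript", "manuscript", shorthand=True),
    ArchiveItem("sample_0003.tif", "Sample photograph", "photograph"),
]

print([item.title for item in find(items, shorthand=True)])
```

A real repository would store such records in a database and expose them through search and browse interfaces; the in-memory list here only shows why descriptive fields are useful.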

powered by | utilizing digilib |