Supercomputers at ZIB: Past to Present

Supercomputing at ZIB started in 1984 with the installation of the legendary Cray-1M system in Berlin. But hardware resources alone do not make supercomputing feasible for the various disciplines in science, engineering and other areas. The idea of HPC software consultants was born at ZIB and was quickly taken up by other centers as well. Today, the HPC consultants at ZIB pursue their own domain-specific research in fields such as chemistry, engineering, earth sciences or bioinformatics while providing consultancy to external users of the supercomputers. Our basic principle “Fast Algorithms – Fast Computers”, coined almost thirty years ago, still holds today.

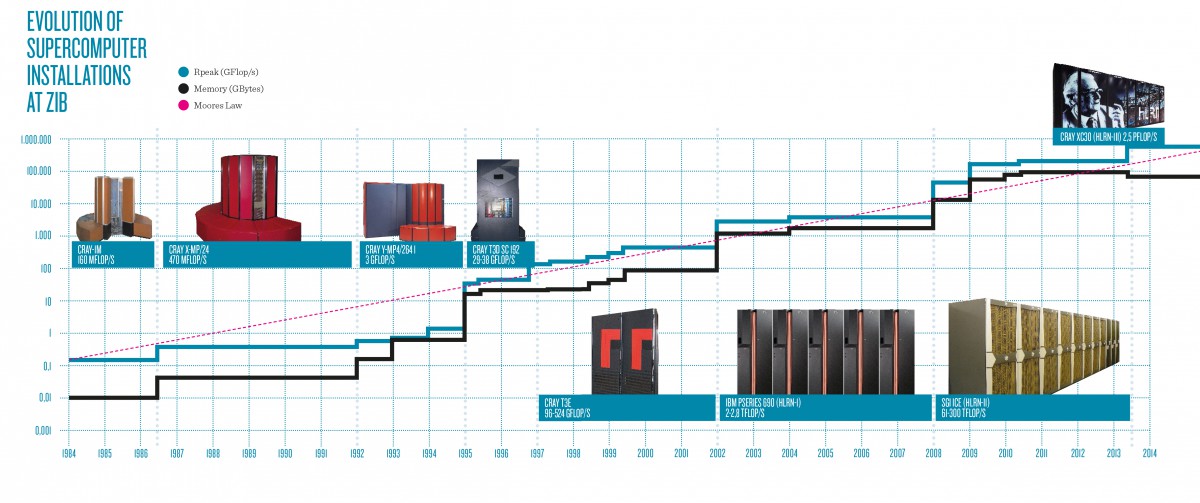

Supercomputer Installations at ZIB

The story of supercomputer installations at ZIB started in 1984. All systems up to the Cray T3E were financed solely by the State of Berlin, while the systems installed after 2002 were jointly financed by the HLRN member states and operated at the two sites ZIB/Berlin and LUIS/Hanover. The figure compares the growth of the systems’ peak performance (blue curve) and memory capacity (black curve) with Moore’s law (dashed line). Over the years, ZIB regularly had a system listed in the TOP500 list, even though we did not focus on peak performance but rather on well-balanced systems with powerful interconnects and sufficiently large main memories. Moreover, the supercomputers operated at ZIB are not single monolithic systems, but rather a number of system components with complementary architectures for massively parallel processing (MPP) and shared-memory processing (SMP). The driving force behind the selection of our system architectures has, of course, always been user demand. Apart from the high investment costs, the sharp increase in operating costs became a major burden for HPC service providers. Today, Germany is probably among the worst of all European countries with respect to energy prices. From 2013 on, this financial burden could no longer be carried by the two HLRN sites in Berlin and Hanover alone. Consequently, the HLRN board decided to jointly finance the costs for system maintenance and energy, starting with the HLRN-III in 2013.

THE HLRN-III PROCUREMENT: AN OPTIMIZATION PROBLEM

Because of the long and time-consuming application process for HPC resources in Germany, the HLRN consortium started the planning process for the HLRN-III in 2009/2010, shortly after the installation of the predecessor system. In spring 2011, the HLRN-III proposal was submitted to the German Council of Science and Humanities (WR) and the German Research Foundation (DFG). After their positive decision, the HLRN alliance mandated ZIB to coordinate the Europe-wide procurement. All relevant HPC vendors were asked to participate. After several negotiation rounds, Cray’s bid was formally accepted and the competing bidders were informed. The contract was signed in December 2012 and the system was delivered in August 2013. From then on, everything progressed very fast: Cray needed only a little more than one week to install the XC30 from scratch at our premises. The software installation on the various support servers (login, batch, data mover, firewalls, monitoring, accounting, etc.) took more time. Because of the complex dependencies between these servers, this difficult part of the system installation is often underestimated. This is especially true for the HLRN system with its unique “single-system view”, which provides a single user login, project management, and accounting for a system operated at two sites in Berlin and Hanover.

HOW TO SELECT THE RIGHT SUPERCOMPUTER?

This section is a short excursion into how we selected our HLRN-III supercomputer. Finding the perfect system that matches the requirements of its users and administrators and, last but not least, the capabilities of the providers is far from an easy task. And yes, we found an almost “perfect” system architecture …

We received proposals from many well-known HPC vendors in response to our European procurement (RFP). The selection process was primarily guided by the performance results of the HLRN-III Benchmark Suite. This benchmark suite aims to rate the overall compute and I/O performance of the two-site HLRN-III configuration over its operating time, which spans two installation phases. The idea is to express the value of each HLRN-III configuration offered during the negotiation stage by a single score. The HLRN-III benchmark comprises the application benchmark as its major part, complemented by an I/O benchmark section and the HPCC benchmark to gain insight into low-level characteristics of the system architecture. The benchmark sections are weighted differently, with the application benchmark results having the largest impact.

APPLICATION BENCHMARK

The HLRN-III has to serve a broad range of HPC applications across various scientific disciplines in the North-German science community. Therefore, and not for the first time, our RFP and subsequent decision-making process were based on a balanced set of important applications running on HLRN resources. Here, balance means covering a representative spectrum of traditional and foreseeable major applications across the HLRN user community. The application benchmark is composed of eight program packages: BQCD, CP2K, PALM suite, OpenFOAM, NEMO, FESOM, and FRESCO. Workloads for the benchmark suite were defined by the HLRN benchmark team in close cooperation with major stakeholders from the different scientific fields. Reference benchmark results were obtained on the HLRN-II configuration with representative input data sets, using large portions of the HLRN-II resources where possible.

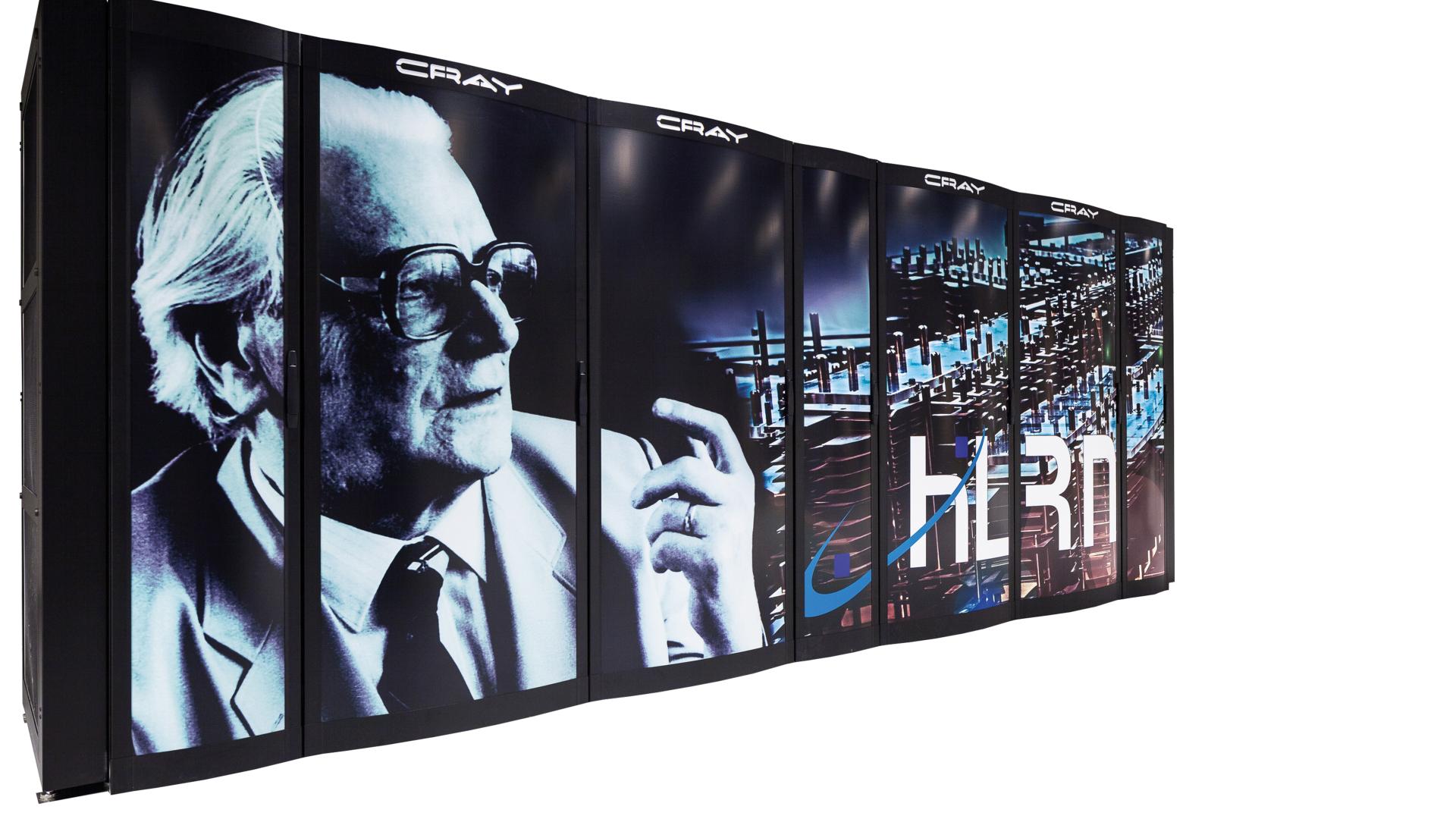

One of the compute cabinets of the Cray XC30 “Konrad” at ZIB (phase 1).

I/O BENCHMARKS

The I/O benchmark suite measures the bandwidth (IOR) and metadata performance (MDTEST) of the global parallel file system. In particular, it is used (i) to measure the I/O bandwidth to the global file systems HOME and WORK, and (ii) to evaluate the metadata performance of the parallel file system, i.e., the achievable rate of file create, remove, and stat operations per second.
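To make the MDTEST metrics concrete, the following is a minimal single-process sketch, assuming Python and a local file system, that times the same three metadata operations and reports them as operations per second. The function name `metadata_rates` and the default file count are illustrative only; the real MDTEST runs many MPI tasks concurrently against the parallel file system and is far more configurable.

```python
import os
import tempfile
import time


def metadata_rates(n_files: int = 1000) -> dict[str, float]:
    """Single-process create/stat/remove rates in operations per second.

    A toy stand-in for what MDTEST measures; the real benchmark runs many
    MPI tasks concurrently against the parallel file system.
    """
    rates = {}
    with tempfile.TemporaryDirectory() as workdir:
        paths = [os.path.join(workdir, f"f{i:06d}") for i in range(n_files)]

        t0 = time.perf_counter()
        for p in paths:
            with open(p, "w"):  # create an empty file
                pass
        rates["create"] = n_files / (time.perf_counter() - t0)

        t0 = time.perf_counter()
        for p in paths:
            os.stat(p)  # query file metadata
        rates["stat"] = n_files / (time.perf_counter() - t0)

        t0 = time.perf_counter()
        for p in paths:
            os.remove(p)  # delete the file
        rates["remove"] = n_files / (time.perf_counter() - t0)
    return rates


if __name__ == "__main__":
    for op, rate in metadata_rates().items():
        print(f"{op:>6}: {rate:,.0f} ops/s")
```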

PERFORMANCE RATING METHODOLOGY

Our goal was to maximize the work that can be carried out on the computer system over its total operation time. This overall serviceable work is calculated with the HLRN-III Benchmark Rating Procedure described below.

Our rating procedure is based on the fundamental assumption that the work which can be carried out by the system is split equally across the various user communities: the HLRN policy implies no bias towards a specific science or engineering field. Ideally, each stakeholder is represented by one of the application benchmarks. The achievable sustained performance of each application benchmark determines the amount of work per community within the system’s operating time. Under the equal-share assumption described above, the geometric mean of all application benchmark results gives the average performance of a system configuration, the key performance indicator, which can then be translated into the target work value. To allow for flexibility in terms of up-to-date technology, the vendors were allowed to offer a second installation phase with a technology update or a complete hardware exchange. In that case, the vendor has an additional degree of freedom in choosing an optimal point in time for the second installation phase. Again, the optimization target was to maximize the overall work which can be delivered by the system, now a time-weighted sum of the key performance indicators of the individual installation phases.
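The rating logic above can be sketched in a few lines of Python. This is a hedged illustration, not the actual HLRN-III rating code: the normalization to a reference system, the function names `kpi` and `total_work`, the use of months as the time unit, and all numbers in the usage example are assumptions.

```python
from math import prod


def kpi(perf: dict[str, float], ref: dict[str, float]) -> float:
    """Key performance indicator: geometric mean of the per-application
    performance, each normalized to a reference system (assumed: HLRN-II)."""
    ratios = [perf[app] / ref[app] for app in ref]
    return prod(ratios) ** (1.0 / len(ratios))


def total_work(phases: list[tuple[float, float]]) -> float:
    """Time-weighted sum over installation phases.

    Each entry is (operating time of the phase, KPI during that phase);
    measuring time in months is an assumption of this sketch.
    """
    return sum(duration * k for duration, k in phases)


if __name__ == "__main__":
    # Hypothetical reference and phase-1 results for three of the benchmarks.
    ref = {"BQCD": 1.0, "CP2K": 1.0, "PALM": 1.0}
    phase1 = {"BQCD": 2.0, "CP2K": 4.0, "PALM": 8.0}
    k1 = kpi(phase1, ref)  # geometric mean of 2, 4, 8 is about 4.0

    # Offer A: a single phase for 60 months.
    # Offer B: phase 1 for 20 months, then an upgrade with KPI 6.0 for 40 months.
    print(total_work([(60, k1)]), total_work([(20, k1), (40, 6.0)]))
```

With these made-up numbers, offer B delivers more total work (about 320 versus 240 KPI-months), which illustrates why the timing of the second phase is a real degree of freedom for the vendor. A geometric mean of normalized ratios also has the property that the ranking of two configurations does not depend on which reference system is used for normalization, and a single large speedup cannot dominate the score as easily as with an arithmetic mean.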

For each of the application benchmarks, a minimum performance was defined in the RFP to make sure that the new system shows no performance degradation for any specific application case. Missing this minimum thus acts as a strong penalty in the evaluation. The application performance is measured on a fully loaded system running as many application instances as possible. Application performance is either determined inside the application itself (if simple operation counts can be captured), or application wall times are converted to performance numbers using predefined operation counts.
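A minimal sketch of the wall-time conversion and the minimum-performance check, assuming Python. The function names are hypothetical, and aggregating over instances by simple multiplication assumes identical instances with equal wall times.

```python
def performance_from_walltime(op_count: float, walltime_s: float) -> float:
    """Convert a measured wall time into a performance number (operations
    per second) using an operation count predefined for the benchmark case."""
    return op_count / walltime_s


def loaded_system_performance(op_count: float, walltime_s: float, instances: int) -> float:
    """Aggregate performance on a fully loaded system that runs
    `instances` concurrent copies of the same benchmark case."""
    return instances * performance_from_walltime(op_count, walltime_s)


def below_minimum(perf: dict[str, float], minimum: dict[str, float]) -> list[str]:
    """Names of the applications that miss their RFP minimum performance."""
    return [app for app, floor in minimum.items() if perf[app] < floor]
```

How a shortfall reported by `below_minimum` translates into a penalty on the overall score is not specified in the text, so this sketch only reports the violations.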

We do not want to leave the reader of this section without disclosing at least one benchmark number: the HPL performance. The two Cray XC30 systems of installation phase 1 are each rated with a Linpack performance (Rmax) of 295.7 TFlop/s. With that, the two MPP components of HLRN-III were ranked at positions 120 and 121 in the November 2013 issue of the TOP500 list.

OVERALL SYSTEM ARCHITECTURE

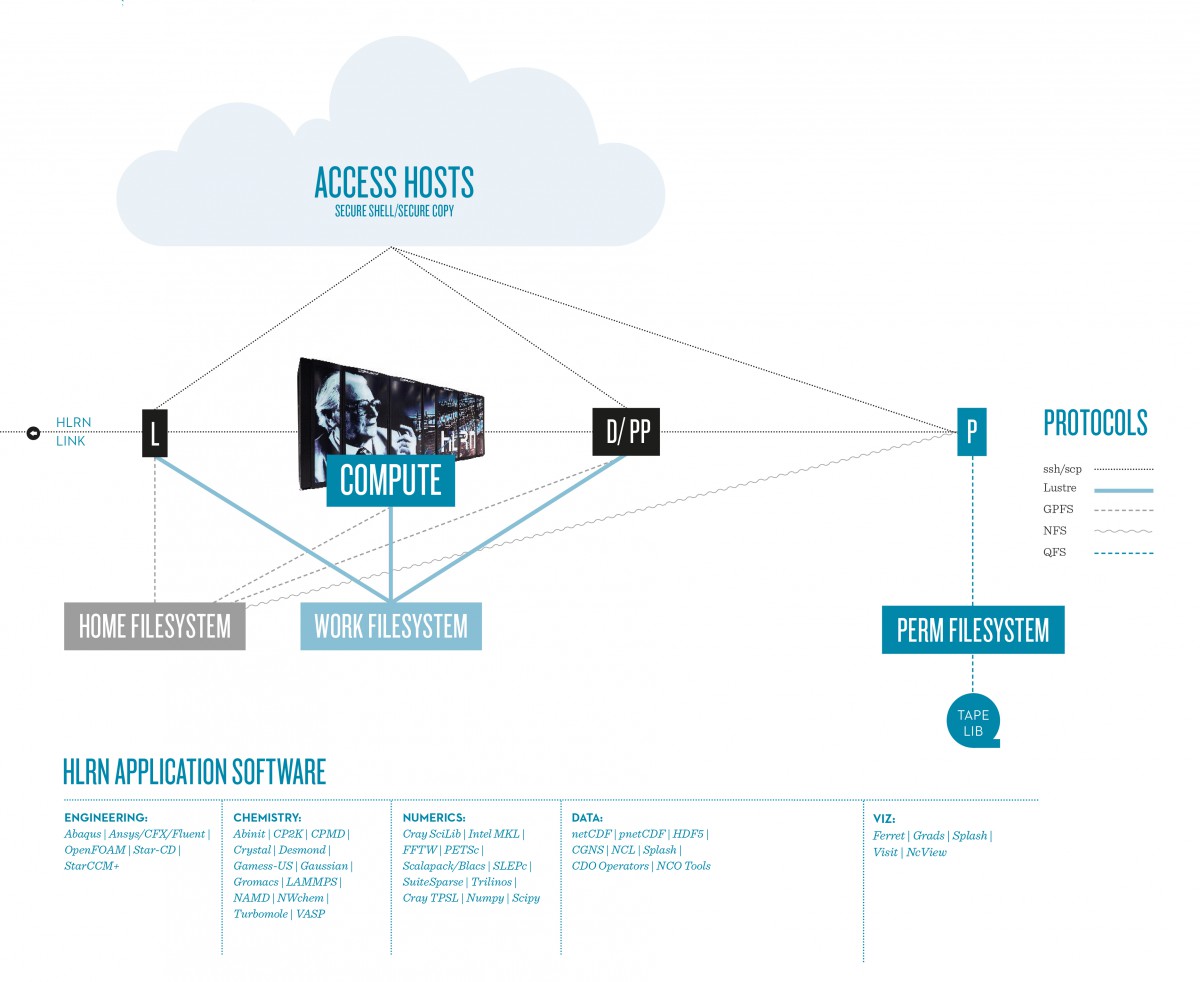

The two HLRN-III complexes, »Konrad« in Berlin and »Gottfried« in Hanover, are connected by a dedicated 10 Gb/s fiber link. The current installation (phase 1) comprises, at each site, a four-cabinet Cray XC30 system of 744 nodes with 24 Intel Ivy Bridge cores each, 46.5 TByte of distributed memory and 1.4 PByte of on-line disk storage in a Lustre filesystem.

KEY HARDWARE PROPERTIES OF THE CRAY XC30 SYSTEM (PHASE 1)

Each of the two Cray XC30 compute systems provides a peak performance of 343 TFlop/s. The 744 compute nodes within one installation are connected via the Cray Aries network, which provides scalability across the entire system. Within a node, 24 Ivy Bridge cores clocked at 2.4 GHz share 64 GByte of memory. The compute and service nodes have access to global storage resources, i.e., a common HOME and a parallel Lustre filesystem. The HOME filesystem is implemented as network attached storage (NAS) with a total capacity of 1.4 PByte. The Lustre filesystem provides high-speed access to large temporary data sets with a total capacity of 2.8 PByte.
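The headline numbers of one phase-1 system can be cross-checked with simple arithmetic. This sketch assumes 8 double-precision floating-point operations per core and cycle (AVX on Ivy Bridge, no fused multiply-add), reads GByte and TByte as binary units, and takes the 295.7 TFlop/s Rmax to refer to a single system.

```python
NODES = 744               # compute nodes per site (phase 1)
CORES_PER_NODE = 24       # Intel Ivy Bridge cores per node
CLOCK_HZ = 2.4e9          # core clock frequency
FLOPS_PER_CYCLE = 8       # assumed: AVX, 4-wide double-precision add + 4-wide multiply
MEM_PER_NODE_GB = 64      # memory per node in GByte
RMAX_TFLOPS = 295.7       # HPL result, assumed to refer to one system

peak_tflops = NODES * CORES_PER_NODE * CLOCK_HZ * FLOPS_PER_CYCLE / 1e12
memory_tbyte = NODES * MEM_PER_NODE_GB / 1024   # binary units: GByte -> TByte
hpl_efficiency = RMAX_TFLOPS / peak_tflops

print(f"peak: {peak_tflops:.1f} TFlop/s")          # peak: 342.8 TFlop/s
print(f"memory: {memory_tbyte:.1f} TByte")         # memory: 46.5 TByte
print(f"HPL efficiency: {hpl_efficiency:.1%}")     # HPL efficiency: 86.3%
```

Under these assumptions, the computed peak and memory match the 343 TFlop/s and 46.5 TByte quoted in the text.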

HLRN System Architecture per site: The two HLRN-III complexes with the compute system, login nodes (L), data nodes (D), post-processing nodes (PP), and archive servers (P).

SCIENTIFIC DOMAINS ON HLRN-III

WORKLOADS AND THEIR RESOURCE REQUIREMENTS

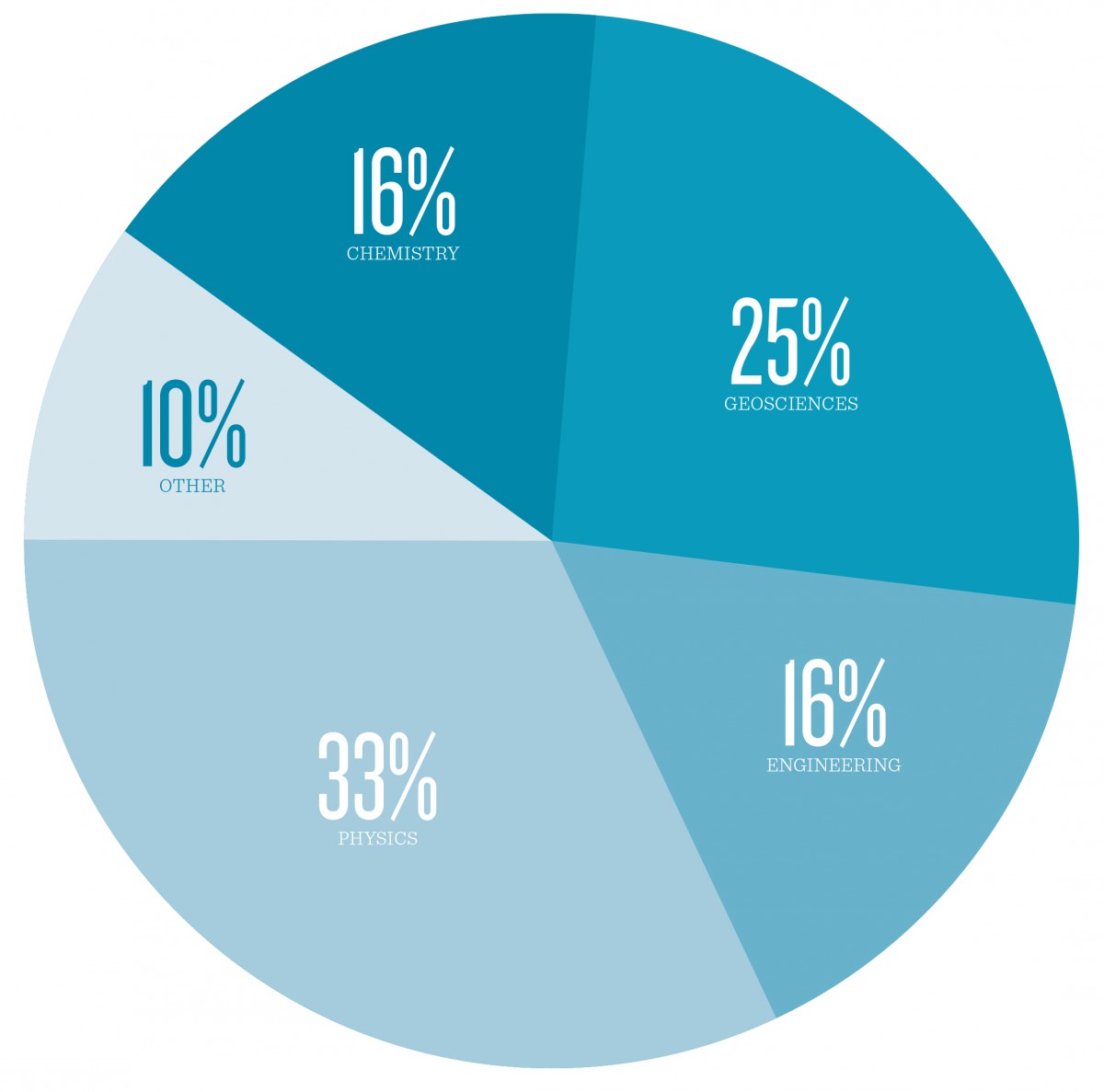

The projects at HLRN cover a wide variety of scientific areas and research topics with different computational demands. The pie chart illustrates the CPU time usage on the HLRN-II system in 2013 for the different scientific areas.

The job sizes are dictated by the problem size in terms of memory requirements as well as by the CPU time needed to reach a solution. First, the problem has to fit into memory. If the parallel efficiency and scalability of the code and the problem allow, the number of nodes can be increased to obtain a shorter time to solution. The workload is a broad mix of all kinds of job types. Among the projects we observe two extremes. Projects of the first type submit hundreds or thousands of independent jobs (“job clouds”) that are themselves either moderately or massively parallel computations. In principle, the workload presented by these projects can adapt to the system utilization. Projects in particle physics are prominent representatives of this kind of work flow.

Projects of the second type conduct only a small number of simulations that require a long total runtime until the desired solution is obtained. Here, each computation would ideally be performed in one long run requiring several days, weeks or even months of wall time. System stability considerations as well as fair-share aspects of the overall system usage have led us to the policy of limiting the maximum job run time to 24 hours. For the affected projects, this requires user-initiated checkpoint and restart capabilities in their codes. These projects may require special treatment by the batch system (e.g., special queues, reservations). Climate development or long-term statistics for unsteady fluid-dynamic flow are typical examples of this type of work flow. The requirements of all other projects lie between these two extremes.
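The 24-hour limit means that long simulations must save their state before the job ends and resume from it in the next job. Below is a minimal sketch of such application-level checkpoint/restart, assuming Python and a JSON file as checkpoint format; `run`, `load_state`, `save_state`, the 15-minute safety margin, and the toy state are all hypothetical. Production codes typically write binary, often parallel, checkpoints instead.

```python
import json
import os
import time

WALL_LIMIT_S = 24 * 3600      # maximum job run time from the batch policy
SAFETY_MARGIN_S = 15 * 60     # assumed time reserved for writing the checkpoint


def load_state(path: str) -> dict:
    """Resume from the last checkpoint, or start from scratch."""
    if os.path.exists(path):
        with open(path) as f:
            return json.load(f)
    return {"step": 0, "value": 0.0}


def save_state(state: dict, path: str) -> None:
    """Write to a temporary file first, then replace the checkpoint with it,
    so that an interruption during the write never leaves a corrupted file."""
    tmp = path + ".tmp"
    with open(tmp, "w") as f:
        json.dump(state, f)
    os.replace(tmp, path)


def run(total_steps: int, start_time: float, path: str) -> bool:
    """Advance a toy simulation until it finishes or the wall-time budget runs low.

    Returns True when the simulation is complete, False when the job must be
    resubmitted to continue from the saved checkpoint.
    """
    state = load_state(path)
    while state["step"] < total_steps:
        if time.time() - start_time > WALL_LIMIT_S - SAFETY_MARGIN_S:
            save_state(state, path)
            return False
        state["value"] += 1.0     # placeholder for one simulated time step
        state["step"] += 1
    save_state(state, path)
    return True
```

In practice, each job would call `run` with its own start time and be resubmitted, for example through job dependencies in the batch system, until `run` returns True.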

Another aspect influencing the work flow of many projects is I/O. Here, the requirements range from very small amounts of data to be read or written to massive data transfers throughout the course of the computations. On one side, I/O can be concentrated in one or very few files, while other projects use several files per MPI task, leading to I/O from or to several thousands to tens of thousands of files per job, written in each simulated time step. The overall amount of data transferred in one job can be in the order of several terabytes.
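The difference between the two I/O patterns is easy to quantify. The following sketch, with hypothetical function names and job parameters, counts the files a job creates under each pattern, and hence at least as many file-create operations on the metadata server.

```python
def files_per_job(tasks: int, files_per_task: int, output_steps: int) -> int:
    """Files created by one job when every MPI task writes its own files
    at every output time step (file-per-process pattern)."""
    return tasks * files_per_task * output_steps


def files_per_job_shared(shared_files: int, output_steps: int) -> int:
    """Files created when all tasks write into a few shared files per step."""
    return shared_files * output_steps


if __name__ == "__main__":
    # Hypothetical job: 10,000 MPI tasks, one file per task, 100 output steps.
    print(files_per_job(10_000, 1, 100))      # 1000000
    print(files_per_job_shared(1, 100))       # 100
```

Each of these files later also costs stat and remove operations, which is why the metadata rates measured by MDTEST matter for such workloads.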

Especially those projects with big result data sets face the problem of demanding pre- and post-processing procedures. This part of their work flow can often be more time-consuming than the computations themselves. Thus, these projects need to carefully balance their data production with their capabilities for pre- and post-processing the data.

CPU TIME USAGE ON THE HLRN-II IN 2013

RESEARCH FIELDS UTILIZING THE HLRN-III

CHEMISTRY AND MATERIAL SCIENCES

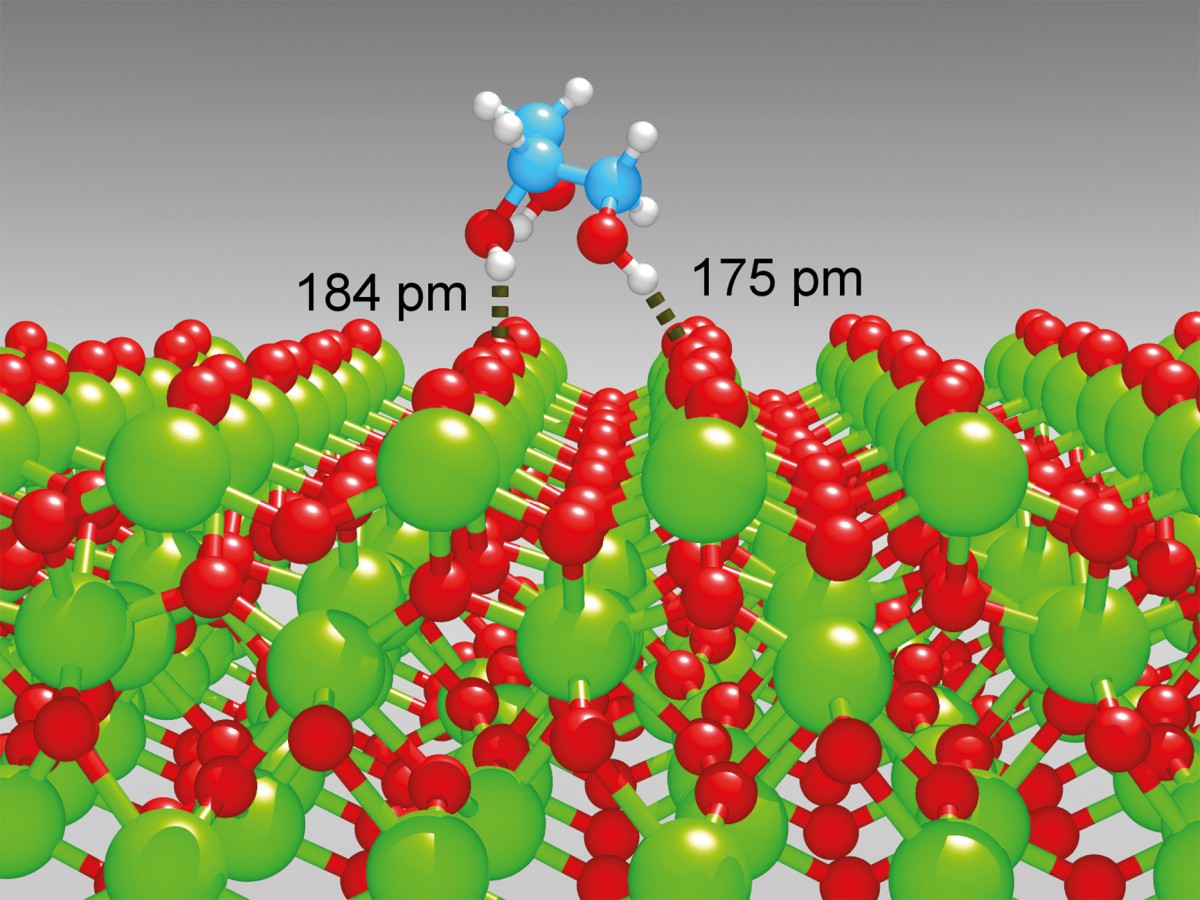

Numerical simulations of molecular complexes range from high-level ab initio electronic structure calculations on systems of only a few atoms to coarse-grained dynamics simulations of macromolecular systems. The scientific projects employ either empirical inter-atomic potential functions or density functional theory (DFT) for the description of their model systems. DFT is the workhorse for electronic structure calculations on new materials for hydrogen storage, photoelectrolysis, molecular electronics, and spintronics. It is also employed at HLRN to describe properties of transition metal oxide aggregates, nanostructured semiconductors, functionalized oxide surfaces and supported graphene, as well as zeolite and metal-organic framework catalysts.

Hydrogen bond distances of a glycerol molecule adsorbed at the surface of a zirconia crystal

EARTH SCIENCES

The earth sciences domain plays an important role in the HLRN alliance. Projects are conducted in atmospheric sciences, meteorology, oceanography, and climate research. The PALM software, a parallelized large-eddy simulation model for atmospheric and oceanic flows, is developed at the Institute of Meteorology and Climatology (Leibniz University Hanover) and is especially designed for massively parallel computer architectures. The scalability of PALM has been tested on up to 32,000 cores and has been demonstrated on the Cray XC30 at HLRN-III by simulations with 4320³ grid points that require approx. 13 TByte of memory.
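The quoted numbers imply a memory footprint per grid point that can be checked quickly. This sketch reads 13 TByte as 13 × 10¹² bytes; with binary terabytes the result would be about 10% higher. How PALM actually distributes this memory across variables and work arrays is not stated in the text.

```python
GRID_POINTS = 4320 ** 3     # 4320 x 4320 x 4320 grid points
MEMORY_BYTES = 13e12        # approx. 13 TByte, read here as decimal terabytes

bytes_per_point = MEMORY_BYTES / GRID_POINTS
doubles_per_point = bytes_per_point / 8   # 8-byte double-precision values

print(f"{GRID_POINTS:,} grid points")               # 80,621,568,000 grid points
print(f"{bytes_per_point:.0f} bytes per point")     # 161 bytes per point
print(f"{doubles_per_point:.0f} doubles per point") # 20 doubles per point
```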

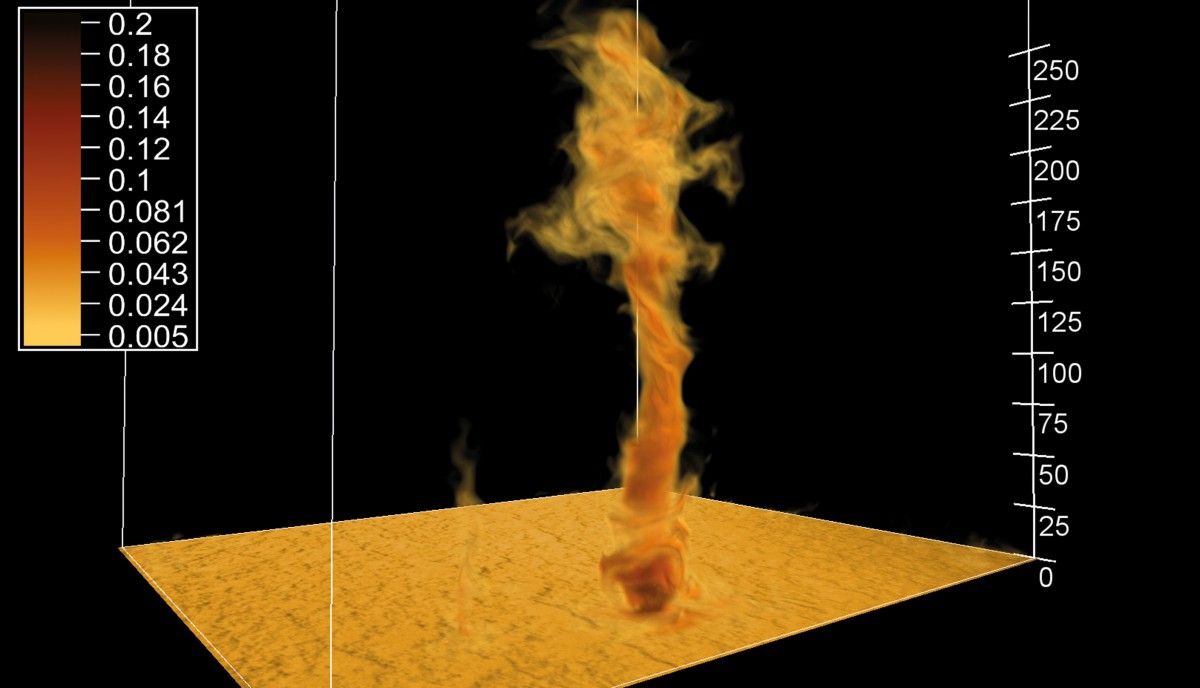

Dust devil simulated with PALM. The displayed area of 200 × 200 m² represents only a small part of the total simulated domain.

PHYSICS

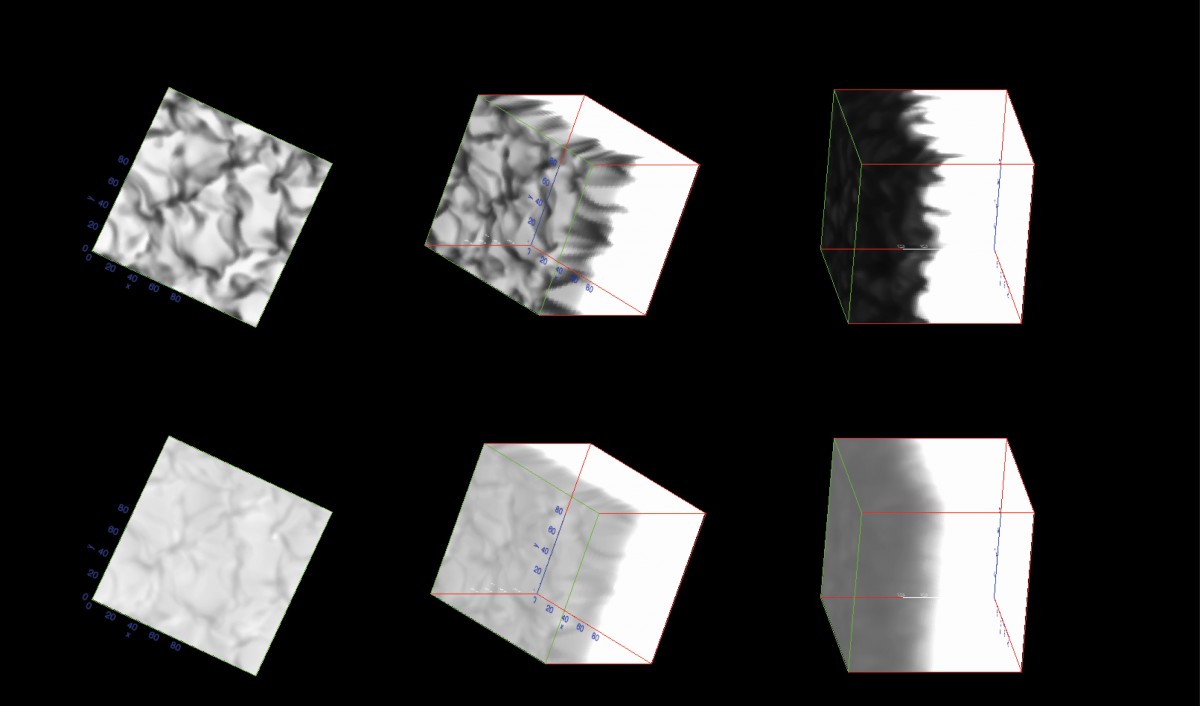

Scientific questions in elementary particle physics, astrophysics and materials science are solved on the HLRN-III resources. The PHOENIX program, developed at the Astrophysics Institute (University of Hamburg), is designed to model the structures and spectra of a wide range of astrophysical objects, from extrasolar planets to brown dwarfs and all classes of stars, extending to novae and supernovae. The main results of the calculations are synthetic spectra and images, which can be compared directly to observed spectra. PHOENIX/3D simulations show strong and weak scaling (94% efficiency) on up to 131,072 processes. With that, 3D simulations that consider non-equilibrium thermodynamics produce at least 2.6 TB of spectral data; saving the complete imaging information would yield about 11 PB of raw data.
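The 94% figure is a parallel efficiency. The usual definitions for the two scaling modes are sketched below; the function names and timings are hypothetical, and the reference process count used for the PHOENIX/3D measurements is not stated in the text.

```python
def strong_scaling_efficiency(t_ref: float, p_ref: int, t: float, p: int) -> float:
    """Fixed total problem size: E = (t_ref * p_ref) / (t * p)."""
    return (t_ref * p_ref) / (t * p)


def weak_scaling_efficiency(t_ref: float, t: float) -> float:
    """Fixed problem size per process: E = t_ref / t (ideally the run time stays constant)."""
    return t_ref / t


if __name__ == "__main__":
    # Hypothetical timings, not PHOENIX measurements.
    print(strong_scaling_efficiency(1000.0, 1024, 8.0, 131_072))  # 0.9765625
    print(weak_scaling_efficiency(94.0, 100.0))                   # 0.94
```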

Large-eddy simulation of passive scalar mixing at Reynolds number 10,000 and Schmidt number 1000 along the centerline of a mixing device. The region of interest of 2 × 1 × 1 mm is resolved with approximately 200 million cells using OpenFOAM on 2000 cores.

ENGINEERING

Projects in engineering sciences come from the fields of fluid dynamics, combustion, aerodynamics, acoustics and mechanics, with a focus on ship hydromechanics and marine technologies. Other research topics are flow optimization as well as the aerodynamics and acoustics of planes, wings, cars, trucks, and trains, of gas turbine engines and fans, and of propellers for higher flow efficiency. This area is also important in ship hull and propeller design with respect to drag reduction, cavitation reduction, and stability. Environmental aspects are important in research on fuel efficiency and on pollutant and noise reduction in combustion processes.

Visualization of the results for continuum 3D radiation transfer for a stellar convection model for pure absorption (top panel) and strong scattering (bottom panel). A total of about 1.5 billion intensities are calculated for each iteration with the PHOENIX code.

THE MANY-CORE CHALLENGE

For the last fifty years, the number of transistors on processor chips has doubled every 18 to 24 months. This observation became a “law”, named after the Intel co-founder Gordon Moore, who first described the trend in his famous 1965 article.
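To put the doubling periods into numbers: over fifty years, a doubling every 18 to 24 months compounds to a factor between roughly 3 × 10⁷ and 10¹⁰. The helper below is a minimal illustrative sketch; its name is hypothetical.

```python
def growth_factor(years: float, doubling_months: float) -> float:
    """Growth after `years` if a quantity doubles every `doubling_months`."""
    return 2.0 ** (years * 12.0 / doubling_months)


if __name__ == "__main__":
    print(f"{growth_factor(50, 24):.3g}")   # 3.36e+07
    print(f"{growth_factor(50, 18):.3g}")   # 1.08e+10
```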

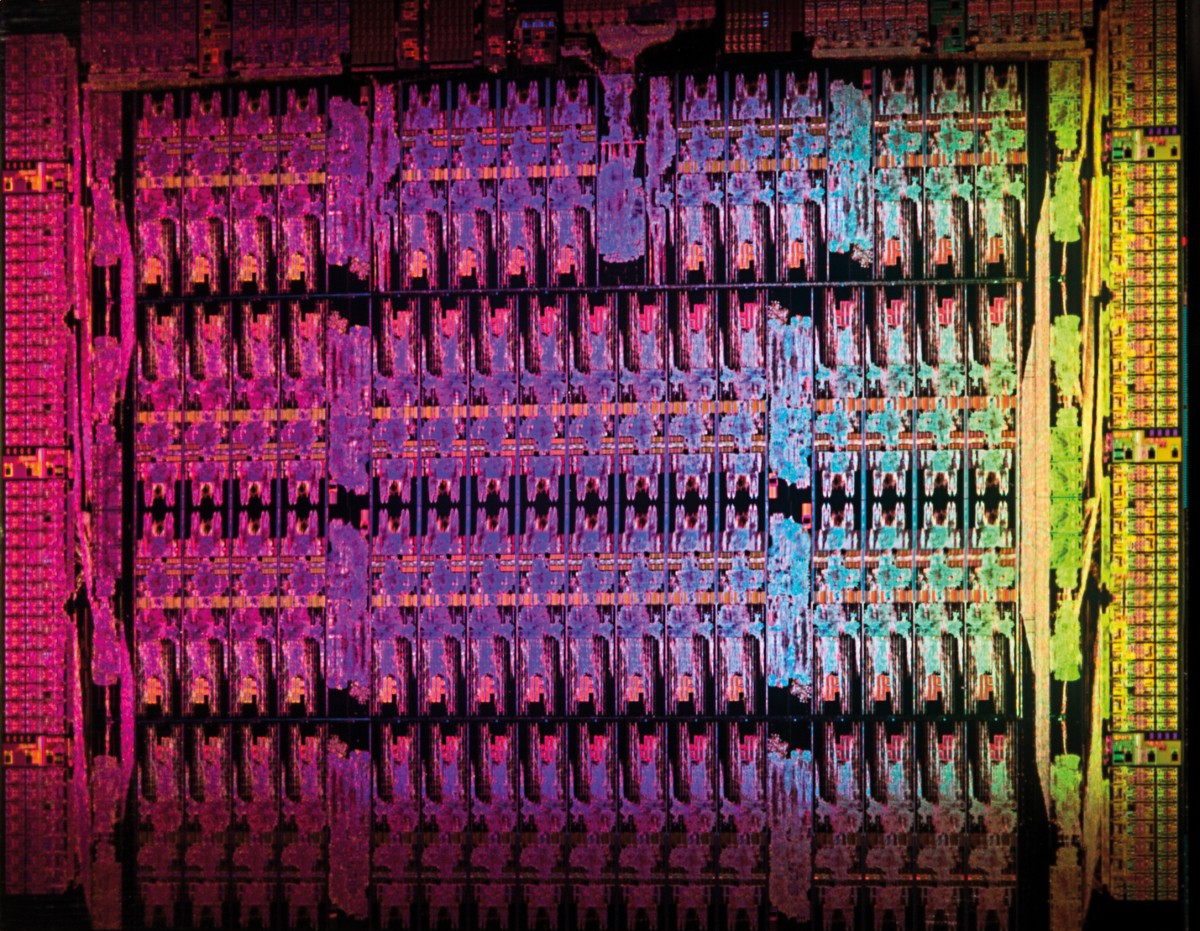

The Intel Xeon Phi die with its 61 cores.

Folk wisdom simplified Moore’s law to the doubling of single-processor performance every 18 months. Interestingly, this “law” was a perfect orientation line for many decades. But why should we expect this trend to continue in the future? Physical and architectural restrictions cannot be denied. Today, the number of transistors on a chip still increases at a fast pace, but the additional transistors are now used to put more processor cores onto a chip. Multi-core chips with 2 to 12 cores are standard computing devices today, and many-core chips with 60 and more cores are also available. We believe that many-core CPUs will become mainstream in high-performance computers. Future supercomputers will comprise heterogeneous compute and storage architectures to leverage compute and storage resources without exceeding the power envelope.

»As we look to reaching exascale high performance computing by the end of the decade, we have to ensure that the hardware doesn’t get there and leave the applications behind. This means not only taking today’s applications and optimizing them on parallel architectures, but also co-designing future application software and the future versions of these architectures to achieve optimal performance at all levels from the computing node to the entire system.« Raj Hazra, VP of Intel’s Architecture Group

PREPARING FOR THE MANY-CORE FUTURE

With respect to the envisaged growing importance of many-core computing in HPC, the Parallel and Distributed Computing group at ZIB evaluated disruptive processing technologies, including field programmable gate arrays (FPGAs), the ClearSpeed processor, and the Cell Broadband Engine (Cell BE) (FN:P. May, G. W. Klau, M. Bauer, T. Steinke (2006). Accelerated microRNA Precursor Detection Using the Smith-Waterman Algorithm on FPGAs. In Werner Dubitzky, Assaf Schuster, Peter M. A. Sloot, Michael Schroeder, and Mathilde Romberg, editors, GCCB, volume 4360 of Lecture Notes in Computer Science, pages 19–32. Springer.), (FN:T. Steinke, A. Reinefeld, T. Schütt (2006). Experiences with High-Level Programming of FPGAs on Cray XD1. In Cray Users Group (CUG 2006).), as well as general-purpose graphics processing units (GPUs) from Nvidia (FN:T. Steinke, K. Peter, S. Borchert (2010). Efficiency Considerations of Cauchy Reed-Solomon Implementations on Accelerator and Multi-Core Platforms. In Symposium on Application Accelerators in High Performance Computing (SAAHPC).), (FN:F. Wende, F. Cordes, T. Steinke (2012). On Improving the Performance of Multithreaded CUDA Applications with Concurrent Kernel Execution by Kernel Reordering. In Symposium on Application Accelerators in High-Performance Computing (SAAHPC), pages 74–83.) and, more recently, the Intel MIC architecture (FN:F. Wende, T. Steinke (2013). Swendsen-Wang Multi-cluster Algorithm for the 2D/3D Ising Model on Xeon Phi and GPU. In Proceedings of SC13: International Conference for High Performance Computing, Networking, Storage and Analysis, SC ’13, pages 83:1–83:12, New York, NY, USA. ACM.).

In 2013, our research in the area of many-core computing was recognized by Intel, which established one of only five Intel Parallel Computing Centers worldwide at ZIB. Because of our deep expertise in the efficient use of many-core architectures for HPC codes, Intel and ZIB jointly established a “Research Center for Many-core High-Performance Computing” located at ZIB. This center fosters the uptake of current and next-generation Intel many- and multi-core technology in high-performance computing and big data analytics. Our activities focus on enhancing selected workloads with impact on the HPC community to improve their performance and scalability on many-core processor technologies and platform architectures.

The selected applications cover a wide range of scientific disciplines including materials science and nanotechnology, atmosphere and ocean flow dynamics, astrophysics, drug design, particle physics and big data analytics. Novel programming models and algorithms will be evaluated for the parallelization of the workloads on many-core processors. The workload optimization for many-core processors is supported by research activities associated with many-core architectures at ZIB. Furthermore, the parallelization work is complemented by dissemination and education activities within the North-German Supercomputing Alliance (HLRN) to diminish the barriers involved with the introduction of upcoming highly parallel processor and platform technologies.