Preserving our digital heritage

Even supposedly archetypal analogue domains, such as the venerable institutions preserving our cultural heritage or humanities research, have arrived in the digital age. Archives digitize files and estates in order to save them from decay, and their collections hold an increasing number of born-digital files.

Museums present digital representations of their analogue collections in portals such as the German Digital Library or Europeana, and entire art forms, such as video installations, are developed purely digitally. Libraries buy electronic books, journals and databases and administer licenses instead of shelf metres. Science, too, operates digitally. Increasingly, not only publications but also the underlying research data are regarded as the result of what are often many years of scientific work. Besides classical research data such as measurements or observations, the growing digitization of the humanities means that data records not originally created for research purposes are also becoming subjects of investigation. For instance, patterns of social behaviour can be extracted even from series of seemingly superficial “Twitter feeds”. Another area of humanities research investigates how far modern communication channels, which have a certain equalizing effect, may influence the development of societies.

An example of this is the “Arab Spring”, which gained significant momentum through uncensored coverage in so-called “social networks”. Because they are bound to a specific moment in time, these data collections are, like atmospheric measurements in Antarctica, unrepeatable and irretrievable, and therefore a treasure for future generations of researchers that should be preserved carefully. With the digitization of society, culture, research and science, a digital cultural heritage is growing day by day, and it demands sustainable preservation.

Digital cultural assets, just like analogue objects, must be administered, preserved, maintained and made permanently available by cultural heritage institutions. The new digital collections pose enormous challenges to cultural heritage and research institutions. Safeguarding the availability of digital information in a long-term and trustworthy manner covers the entire life cycle of digital objects: digitization, data management, rule-based enrichment and processing of data, and the persistent long-term availability of information. Small and medium-sized institutions cannot master all this alone.

In Berlin, a cooperative approach has been chosen to digitize cultural assets and ensure their long-term availability. In 2012, the Cultural Affairs Department of the Federal State of Berlin initiated an interdisciplinary digitization support programme that was unprecedented in Germany. The initiative supports digitization projects in various cultural heritage institutions in Berlin financially, and a central consulting and coordination office of the Federal State was established. Since 2012, ZIB has hosted this Service Center for Digitization Berlin, digiS for short. The tasks of digiS start with a sustainable definition of digitization. digiS networks the individual projects of the support programme, provides consulting services for the implementation of digitization and aims to enable institutions to play an active role in their digital practice. The projects supported represent a cross-section of the cultural landscape in Berlin. In total, 17 projects in 11 institutions had been supported by 2013, mainly in museums. An overview of the projects can be found on the digiS website: www.servicestelle-digitalisierung.de. All projects within the Berlin support programme aim both at preserving existing holdings and at creating new entry points to the collections. Another goal is to pave the way into the large national and European cultural portals such as the German Digital Library and Europeana.

© Sergey Yarochkin - Fotolia.com

Community and capacity building

digiS, KOBV, museum project at ZIB

If you understand digitization merely as photographing or scanning an object or encoding an audio recording, you fall short. The process starts much earlier, with a comprehensive description of the formal aspects and the content of the objects. This is the only way to capture contexts and keep an overview of collections containing millions of objects. Inventory-taking or indexing, and thus the capture of metadata, precedes and accompanies every digitization project. In cultural heritage institutions, this process is largely standardized. However, there is no obligation to apply these standards uniformly. This is where the longest-standing project at ZIB comes in. The museum project develops and maintains comprehensive software for the indexing and administration of museum collections and holdings (GOS). Furthermore, the working group conducts research in the area of data modelling and data exchange.

While the digital wave has only recently demanded new structures of museums, libraries, to stay with the metaphor, have already become experienced surfers. Electronic catalogue search, defined metadata schemas for indexing, the administration of electronic journals, e-books and multimedia access portals are well-established concepts in the library world. The Cooperative Library Network Berlin-Brandenburg (KOBV), which has been located at ZIB since 1997, supports its more than 80 member libraries with development work and services in the area of indexing, presentation and search systems for libraries. Since the Library Congress 2013 in Leipzig at the latest, German library networks have also been discussing the administration and long-term preservation of research data. At KOBV, research data management beyond the classical bibliographic description of library catalogues has been investigated since 2011 in the EWIG project; starting in 2014, a distributed Humanities Data Center (HDC) will be developed.

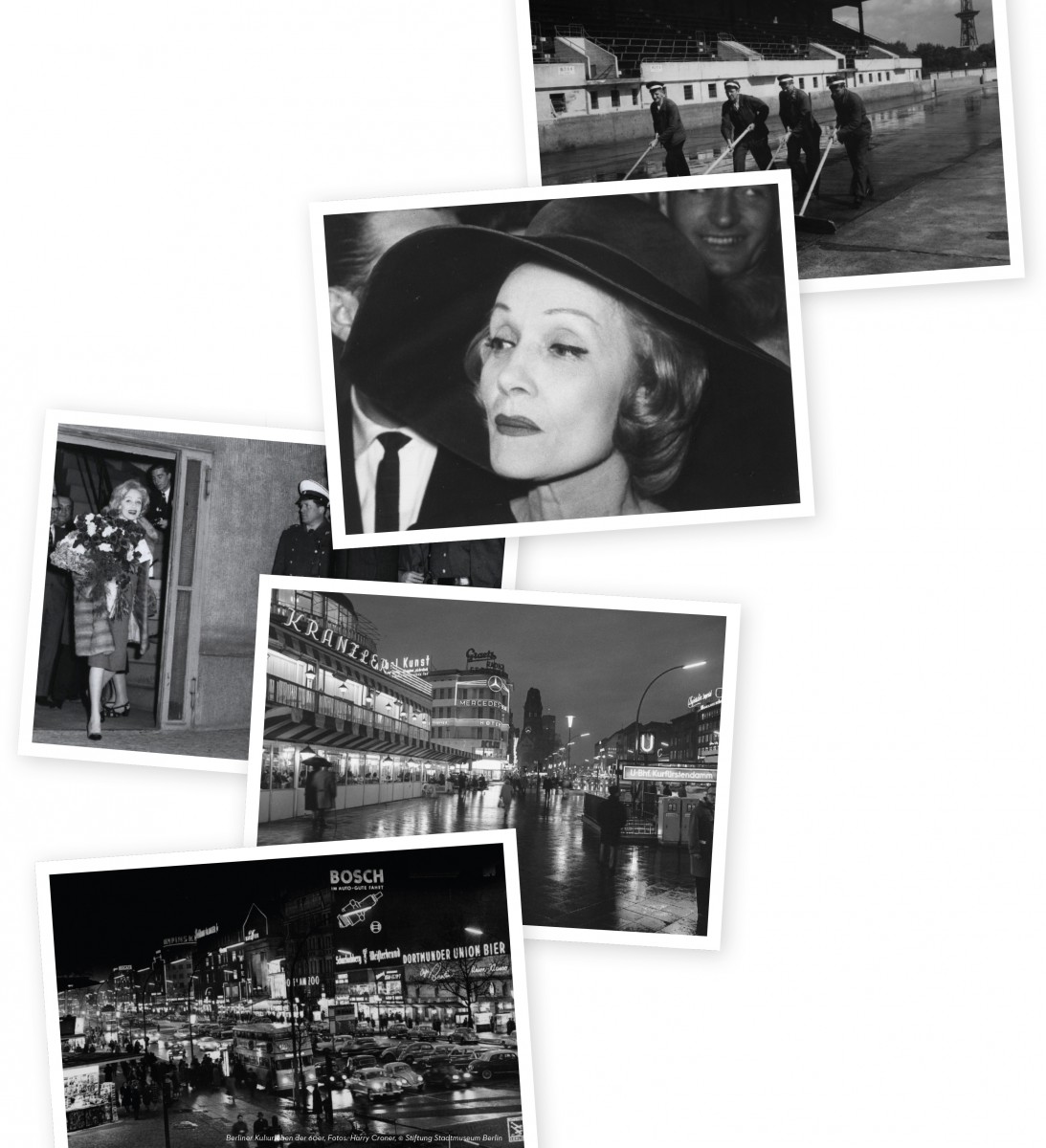

Berlin cultural life of the 1960s, photos: Harry Croner, © Stiftung Stadtmuseum Berlin

‘Berliner Appell 2013’ – Long-term availability as a cooperative task

The central research task shared by all projects within the Scientific Information Department (SIS) is the actual safeguarding of the long-term availability of data. Through the support programme of the Federal State of Berlin, the Service Center for Digitization Berlin (digiS) has been given the mandate to ensure the long-term availability of digital reproductions. In September 2013, ZIB signed the so-called “Berliner Appell”, which calls for joint reflection about the future of our digital society and the preservation of our digital cultural heritage. Since 2013, ZIB has also been a regular member of the Nestor Network of Expertise, thereby actively taking part in shaping national structures in this area.

Long-term preservation strategies at ZIB

Since more and more of the output of art and science is born digital, access to it becomes more and more fragile. Rendering contemporary digital cultural manifestations requires a complex infrastructure: from the description of the data formats, via the software needed to interpret them, to the hardware running this software. If you are lucky enough to find the remnants of an ancient culture written in stone, all you need is an understanding of the language. The rendering process is no problem, since eyesight and sunlight will remain more or less stable for the next millennia. But imagine someone finding an eighty-year-old 5¼-inch floppy disk. Assuming that the magnetization is still intact, do you have a reading device for it? Are you able to determine the file format? Do you happen to have a version of the word processor or, worse, the database application used to create it? The answer to all these questions is most probably no.
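The question of determining a file format can be made concrete. As a minimal sketch, and not a description of any tool used at ZIB, the following Python snippet recognizes a handful of formats by the leading "magic bytes" that their published specifications define. The names `SIGNATURES`, `identify_format` and `identify_file` are illustrative assumptions, and the table is deliberately tiny; real identification tools draw on far larger signature registries.

```python
# Illustrative signature table: leading bytes defined in the public
# specifications of a few common formats. Deliberately incomplete.
SIGNATURES = [
    (b"%PDF-", "PDF"),
    (b"\x89PNG\r\n\x1a\n", "PNG"),
    (b"\xff\xd8\xff", "JPEG"),
    (b"GIF87a", "GIF"),
    (b"GIF89a", "GIF"),
    (b"II*\x00", "TIFF (little-endian)"),
    (b"MM\x00*", "TIFF (big-endian)"),
]


def identify_format(data: bytes) -> str:
    """Return a format name if the data starts with a known signature."""
    for magic, name in SIGNATURES:
        if data.startswith(magic):
            return name
    return "unknown"


def identify_file(path) -> str:
    """Read only the first bytes of a file and try to identify its format."""
    with open(path, "rb") as handle:
        return identify_format(handle.read(16))
```

A file whose leading bytes match none of the known signatures is reported as "unknown", which is the situation the floppy disk scenario describes: without a published specification there is nothing to match against.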

For digital objects to stay usable in the future, you have to preserve them as intellectual entities in their complex context but, if needed, change them on a technological level in order to guarantee their long-term accessibility. Digital preservation is a process actively maintained in the present that ensures access to digital objects in the future. There are still many recordings, photographs and measurements, analogue as well as digital, that are stored without proper care. In the geosciences there is even an annual data rescue award, “to improve preservation and access of research data, particularly of dark data, and share the varied ways that these data are being processed, stored, and used.”

To carry out proper digital preservation, you have to be able to understand the objects you are curating. This will only be possible in the future if certain criteria are met: one is the open availability of the format specifications, the other is the absence of any patent or other right restricting the free use of the standard. These two characteristics are not as widespread as we would like. Some file formats have been patented by their inventors, so if you want to handle such a format in your software, you have to pay a license fee. Even worse, you could be forced to work with proprietary parts of vendor-provided software. Open standards are a key issue in long-term preservation. This mindset is becoming more common with the open access and open knowledge movements.

The Wikimedia Foundation, for instance, describes its mission as being to “empower and engage people around the world to collect and develop educational content under a free license or in the public domain, and to disseminate it effectively and globally”.

Another actor in the field, the Open Knowledge Foundation, states its vision as: “We believe that a vibrant open knowledge commons will empower citizens and enable fair and sustainable societies.” It defines ‘open knowledge’ as any content, information or data that people are free to use, re-use and redistribute, without any legal, technological or social restriction.

The Free and Open Source Software (FOSS) movement belongs to the same line of thought. It has spawned not only some of the software that forms the backbone of today’s Internet, such as the Apache Web Server, but also a large number of tools used in long-term preservation. Some of these tools are used in a test-bed installation of an OAIS-compliant archive at ZIB. ZIB itself has more than 15 years of experience in bitstream preservation, which nowadays is often dubbed ‘passive preservation’, in contrast to the actively maintained process described in the OAIS model.
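Bitstream preservation essentially means being able to show that the stored bits have not changed. A common way to do this is a fixity check against recorded checksums. The following Python sketch illustrates the idea under stated assumptions: the function names and the choice of SHA-256 are illustrative and do not describe the procedures actually used at ZIB.

```python
import hashlib
from pathlib import Path


def sha256_of(path, chunk_size=1 << 20):
    """Compute the SHA-256 checksum of a file, reading it in chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_manifest(root):
    """Map each file below root (by relative path) to its checksum."""
    root = Path(root)
    return {
        path.relative_to(root).as_posix(): sha256_of(path)
        for path in sorted(root.rglob("*"))
        if path.is_file()
    }


def verify_manifest(root, manifest):
    """Compare current checksums with a stored manifest.

    Returns a dict of problems: file name -> "missing" or "changed".
    An empty dict means every recorded file is still bit-identical.
    """
    current = build_manifest(root)
    problems = {}
    for name, expected in manifest.items():
        actual = current.get(name)
        if actual is None:
            problems[name] = "missing"
        elif actual != expected:
            problems[name] = "changed"
    return problems
```

In such a scheme the manifest is created at ingest and stored separately from the data; running `verify_manifest` at regular intervals then reveals silent corruption or loss. It says nothing about whether the content can still be interpreted, which is where active preservation begins.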

Open hard disk, Tim Hasler, cc-by-nc

Open source in long-term preservation

Building on the extremely robust storage infrastructure at ZIB, a long-term archiving system is to be developed that offers different service levels to preserve both technical availability and availability in terms of content, also for heterogeneous data. The individual service levels depend directly on the requirements of the various partners (cultural heritage and research institutions). In this way, the previous approach of a “Swiss bank deposit box” will be complemented step by step by standards-compliant services for long-term archiving.

First tests with the open-source system Archivematica were convincing, particularly because the business logic of the OAIS model (see above) can be mapped by means of highly transparent micro-services that can be modelled at a granular level. In this way, the diversity of data as well as partner-specific requirements for individual levels of long-term availability and curation can be taken into account. In its functionalities and services, Archivematica is oriented not towards the implementation of a complex overall system, but towards institution-specific preservation policies and the tasks and goals embedded in them. Jointly with the Yale University Library, MoMA, the University of British Columbia and the Rockefeller Archive Center, ZIB is engaged in the further development of Archivematica.

Digital humanities and humanities data center

The term Digital Humanities originally referred only to computer-assisted methods in the humanities. The increasing openness of digital data sources, the worldwide networking of these resources, and the possibility of processing huge amounts of data (keyword: Big Data) have widened the concept of Digital Humanities in recent years.

Digital research data infrastructures have been in place for more than 50 years, e.g., in the geosciences with their World Data System. So far, most of the humanities have neither research data infrastructures nor the resources to run them on their own, although here, too, there is a growing need for quality-assured long-term archiving, re-use of and online access to digital research data. Some digital research collections for the humanities are already maintained by institutions such as DNB, DDB, Europeana or IANUS. They, however, cover only a fraction of the relevant research data.

The purpose of the Humanities Data Center, granted in 2013 (and funded by the Niedersächsisches Ministerium für Wissenschaft und Kultur for the period 2014-2016), is to assume responsibility for data hitherto not available in sufficient digital quality for research purposes and for research data from branches of the humanities that currently have no corresponding long-term repository. The partners of ZIB in this project are: Berlin-Brandenburgische Akademie der Wissenschaften, Gesellschaft für wissenschaftliche Datenverarbeitung Göttingen, Akademie der Wissenschaften zu Göttingen, Max-Planck-Institut zur Erforschung multireligiöser und multiethnischer Gesellschaften Göttingen, Niedersächsische Staats- und Universitätsbibliothek Göttingen.

Tape robot, Jürgen Keiper, cc-by-nc

EWIG

EWIG stands for “Entwicklung von Workflowkomponenten für die Langzeitarchivierung von Forschungsdaten in den Geowissenschaften” (Development of workflow components for the long-term archiving of research data in the geosciences). The project, funded by the DFG, aims to support the transfer of research data from different research environments into digital long-term archives. For this purpose, EWIG has identified three focal points. The first is the development of rules for handling research data, so-called policies, which should be generic at first and ideally be accepted throughout the institution later. The second is the development of exemplary workflows that enable the transfer of research data to an OAIS-compliant long-term archive (see ISO Standard 14721:2012) on the basis of Free and Open Source Software (FOSS) components. The third addresses topic-specific qualification: in geoscientific teaching, ‘archived’ research data are analyzed, in a manner of speaking, as archaeological finds. Students learn what type of metadata is relevant and required for the interpretation of preserved data. This is accompanied by a handout for teachers on teaching research data management.

Project partners: ZIB, Helmholtz-Zentrum Deutsches GeoForschungsZentrum Potsdam and Institut für Meteorologie der Freien Universität Berlin. Funding: DFG. Duration: 2011-2014.

Decomposed film in a can, Jürgen Keiper, cc-by-nc

Access to content

In order to enable convenient access to stored data, repository middleware is required. The open-source software Fedora Commons, with Islandora as a potential Web front end, is currently being evaluated with regard to search and access to the data of the long-term archive. Fedora is to be used as the repository for storing derivatives and/or the associated metadata and should enable continued rights- and role-based access to these data as well as to the master files in the long-term archive, including data harvesting via standardized and open protocols such as the Open Archives Initiative Protocol for Metadata Harvesting (OAI-PMH).
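To illustrate what harvesting via OAI-PMH looks like from a client's perspective, the following Python sketch builds a `ListRecords` request and extracts record identifiers from a response. The verb, the `metadataPrefix`, `set` and `from` parameters, the `oai_dc` format and the XML namespace are part of the OAI-PMH 2.0 protocol; the base URL, the sample response and the function names are hypothetical, and no actual ZIB endpoint is implied.

```python
import xml.etree.ElementTree as ET
from urllib.parse import urlencode

# XML namespace of OAI-PMH 2.0 responses.
OAI_NS = {"oai": "http://www.openarchives.org/OAI/2.0/"}


def list_records_url(base_url, metadata_prefix="oai_dc",
                     set_spec=None, from_date=None):
    """Build the URL of an OAI-PMH ListRecords request."""
    params = {"verb": "ListRecords", "metadataPrefix": metadata_prefix}
    if set_spec:
        params["set"] = set_spec
    if from_date:
        params["from"] = from_date  # e.g. "2013-01-01"
    return base_url + "?" + urlencode(params)


def record_identifiers(response_xml):
    """Extract the identifiers of all records in a ListRecords response."""
    root = ET.fromstring(response_xml)
    path = ".//oai:header/oai:identifier"
    return [element.text for element in root.iterfind(path, OAI_NS)]


# Hypothetical, heavily shortened response for illustration only.
SAMPLE_RESPONSE = """<OAI-PMH xmlns="http://www.openarchives.org/OAI/2.0/">
  <ListRecords>
    <record>
      <header>
        <identifier>oai:archive.example.org:object-1</identifier>
        <datestamp>2013-09-01</datestamp>
      </header>
    </record>
    <record>
      <header>
        <identifier>oai:archive.example.org:object-2</identifier>
        <datestamp>2013-09-02</datestamp>
      </header>
    </record>
  </ListRecords>
</OAI-PMH>"""
```

A harvester such as a cultural portal would fetch the URL returned by `list_records_url` at regular intervals, typically with a `from` date so that only new or changed records are transferred. A complete client would also have to follow resumption tokens for large result sets, which this sketch omits.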

With such a repository, not only the institutions themselves but also the general public gain transparent access to these data. This allows a significantly more differentiated use of data, not only for science but also, e.g., in education or the pursuit of personal interests. Furthermore, contextualization and hence enrichment of the data become possible, creating added value (A. Müller, B. Kusch, K. Amrhein and M. Klindt (2013). Unfertige Dialoge - Das Berliner Förderprogramm Digitalisierung. Bibliotheksdienst 47(12): 931-942). For a permanent, unique identification of these research data, ZIB has become a data center in the context of the DataCite DOI scheme. Based on a contract with the TIB Hannover, ZIB can mint its own DOIs. Thanks to the Handle System underlying the DOI concept, the URL stored for a representation may change while the object remains reliably citable and identifiable by means of the DOI, provided that the stored URL is updated whenever it changes. Users thus have a unique and permanent public reference to these objects.
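The indirection that makes a DOI stable can be shown with a toy model. The Python sketch below is not the DataCite or Handle System API; the class `IdentifierRegistry`, the DOI `10.5072/example-object` and the URLs are placeholders chosen for illustration. It only demonstrates the principle: citations carry the identifier, and the registry maps it to wherever the object currently lives.

```python
class IdentifierRegistry:
    """Toy resolver: maps a persistent identifier to its current URL."""

    def __init__(self):
        self._targets = {}

    def register(self, doi, url):
        """Assign a new identifier; an identifier is never reassigned."""
        if doi in self._targets:
            raise ValueError("identifier already registered: " + doi)
        self._targets[doi] = url

    def update_target(self, doi, new_url):
        """Record that the object has moved; the identifier stays the same."""
        if doi not in self._targets:
            raise KeyError(doi)
        self._targets[doi] = new_url

    def resolve(self, doi):
        """Return the URL currently stored for the identifier."""
        return self._targets[doi]


def citation_link(doi):
    """The link a reader follows; it does not contain the storage location."""
    return "https://doi.org/" + doi


# Placeholder identifier and hypothetical locations.
registry = IdentifierRegistry()
registry.register("10.5072/example-object",
                  "https://old-repository.example.org/objects/42")
link_before = citation_link("10.5072/example-object")

# The repository is migrated: only the stored URL is adjusted.
registry.update_target("10.5072/example-object",
                       "https://new-repository.example.org/items/42")
link_after = citation_link("10.5072/example-object")
```

After the migration, `link_before` and `link_after` are identical, while `registry.resolve` returns the new location. If nobody calls `update_target`, the identifier points to a dead URL, which is why the persistence of a DOI depends on the organization maintaining it.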

For the future, it is planned that ZIB will assure the long-term preservation not only of (research) data from cooperation partners of digiS and from projects within the support programme, but also of data from member libraries of the KOBV and from associated research partners. Since, as described above, the ‘Open Science’ paradigm moves the data records underlying publications into the focus of research, archiving these data records is becoming indispensable.

Open culture, open knowledge, open access

Today, ensuring the long-term availability of our digital culture and research is on the political agenda not only in Berlin but throughout Germany. In Berlin, with the support of ZIB, a start has been made towards giving this topic the public attention that is appropriate and necessary in view of its relevance.

The support of individual projects defined in the support programme of the Federal State of Berlin, in conjunction with the accompanying development of an organizational and technical infrastructure at ZIB, takes digitization and long-term preservation out of a niche that has become too narrow and turns them into a task that has to be dealt with strategically at ZIB and beyond.

With its existing infrastructure and services as well as due to its networking capabilities and outstanding cooperation among different projects and institutions (digiS, KOBV, museum project, ITS), ZIB offers excellent opportunities to tackle this not only technical but also social task. A start has been made – you learn on the way.

Museum project

The project “Development of information technology tools for museums” has been in place since 1981 and is therefore older than ZIB itself. Its tasks include, on the one hand, IT support for inventory and documentation projects in museums and, on the other hand, the communication and dissemination of internationally recognized standards within German cultural heritage institutions.

Software

The basic software for all projects is the GOS database system, which was originally developed by the British Museum Documentation Association (MDA, since renamed Collections Trust) especially for inventory and documentation work in museums. Since 1981 it has been further developed by ZIB and made available to interested museums.

Thanks to close contacts with decision-making bodies, e.g. the “Fachgruppe Dokumentation” of the Deutscher Museumsbund and CIDOC (Comité international pour la documentation of the International Council of Museums), ZIB is now well established in the field of museum documentation. The autumn meeting on museum documentation, which is jointly organized each year by the Fachgruppe Dokumentation and the Institut für Museumsforschung, has traditionally taken place at ZIB for more than 15 years now.

Members of the museum project participate in the “data exchange” working group, which was founded by the Fachgruppe Dokumentation. The working group deals with the principles of structuring the data required for the inventory of museum objects and examines (metadata) formats with respect to their usability for the needs of the different collections. The basis for this is the “Conceptual Reference Model” (CRM), which has been developed by CIDOC. The working group is also active in maintaining the data harvesting format “LIDO” (http://network.icom.museum/cidoc/working-groups/data-harvesting-and-interchange/what-is-lido/), which has become the standard format for many museum portals.

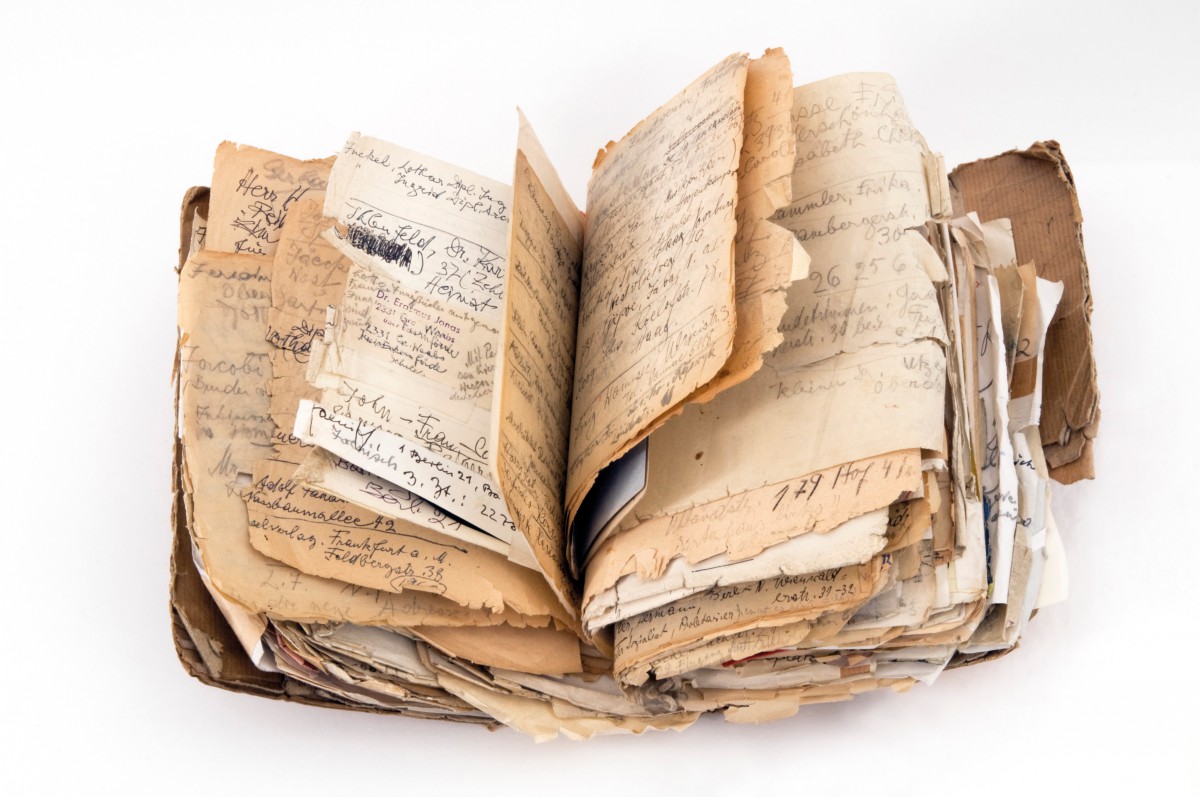

Address book of Hannah Höch, photo: Kai Annett Becker, © Berlinische Galerie