Utilizing shared heterogeneous storage resources efficiently

Data volume, storage capacity, data exchange, and digital collaboration over the internet have grown rapidly over the last few years. Distributed storage “in the cloud” has become common day-to-day practice for many end users, and ‘Big Data’ is the new must-have in business and science. But what does the corresponding storage infrastructure look like, and what can be done to provide predictable performance and efficient use of the costly resources – a mix of fast but expensive flash memory, spinning magnetic disks, and classic tape storage? ZIB addresses these questions and contributes advanced solutions.

Users expect predictable performance and guaranteed capacity for shared storage systems, Hans Splinter (cc by-nd)

Scalable Storage Systems

Scientific applications as well as commercial cloud services generate large amounts of data that may reach multiple petabytes or more. Storing such volumes of data requires thousands of disks and servers. Managing such an infrastructure effectively is challenging, because the failure of storage devices, whole servers, or network links is the norm rather than the exception.

Various large-scale distributed storage systems exist and are accessible through different interfaces that vary from the traditional POSIX-compliant file system interface to simplified object-based access like Amazon’s S3 and databases that offer ACID semantics, such as Amazon’s Relational Database Service (RDS). In the following, we focus on storage systems that can be accessed via the POSIX file-system interface.

What distributed storage systems have in common is that they are usually accessed by multiple users concurrently. Users may influence the performance of each other’s applications significantly. They may share network links, read or write request queues, and may delay each other due to limited concurrency abilities of the underlying storage hardware.

Distributed File Systems

Distributed file systems have been developed for many years. The Network File System (NFS) was one of the earliest implementations, typically relying on a single server with direct-attached storage or with remote disks connected via a storage area network (SAN). The storage devices themselves provide a block interface where fixed-size data can be accessed at defined block addresses. Although NFS supports concurrent access to files over the network and can be scaled to huge capacities by using a SAN, the single server remains a potential performance bottleneck and a single point of failure.

Cluster file systems like GFS2, OCFS2, and SanFS are more scalable and allow clients to access data directly on shared block devices, such as a SAN, while concurrent accesses are coordinated by a lock manager or a central metadata server. This file-system architecture often requires expensive SAN hardware and trusted clients due to limited permission management on the block devices.

Object-based Storage

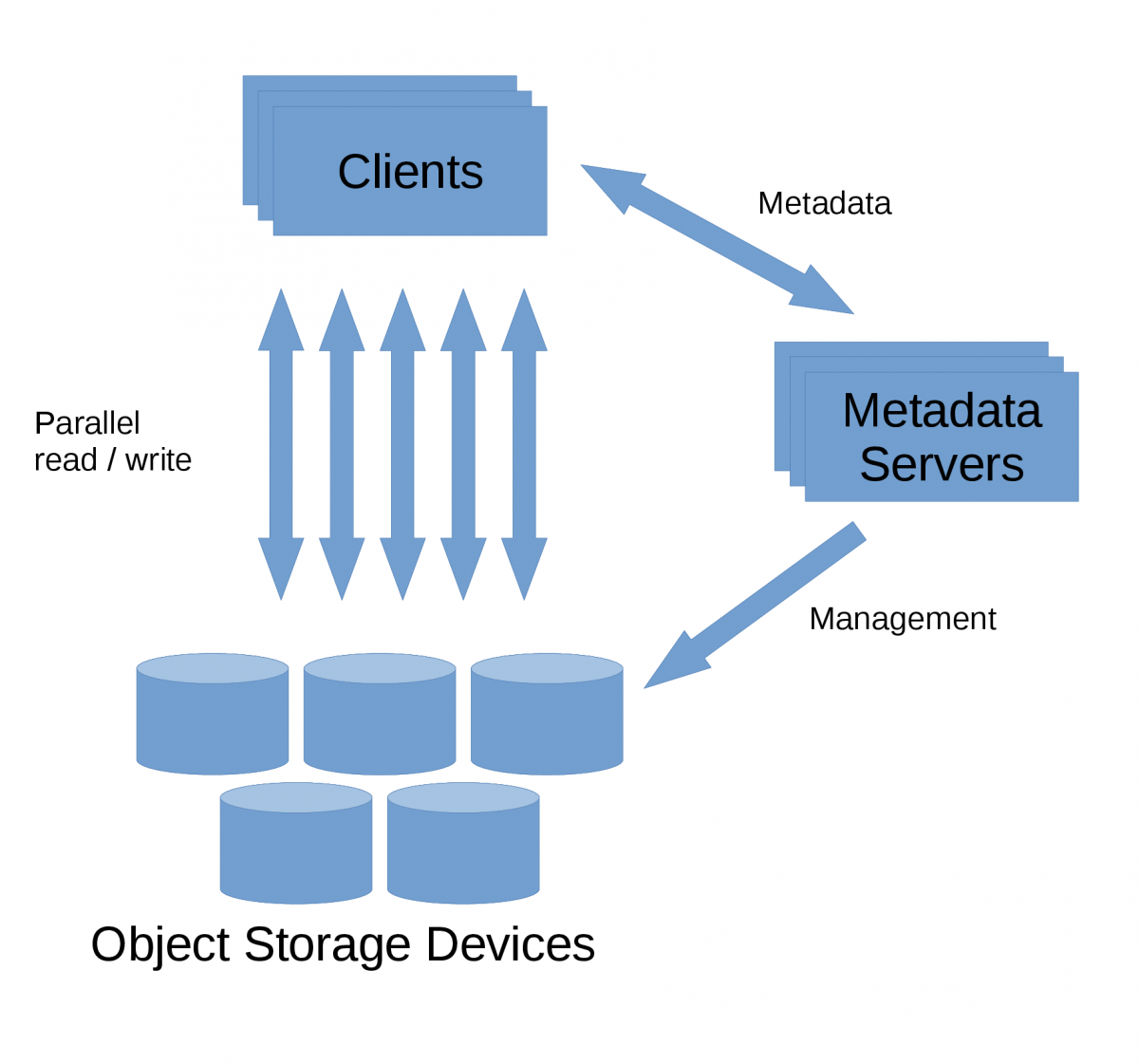

The next advancement of distributed file systems is object-based file systems (FN: M. Mesnier, G. R. Ganger, E. Riedel {2003}. Object-based storage. IEEE Communications Magazine, Volume 41, Issue 8), which separate the handling of the file content and metadata. File content is stored on object storage devices (OSDs), while file metadata is stored and managed by dedicated metadata servers.

Object-based file system architecture with Object Storage Devices, Metadata Servers and Clients.

In contrast to a block device, object storage devices can store data chunks of arbitrary size and are addressed by a user-given object identifier. Object storage devices themselves use block devices internally – for example, HDDs or SSDs – while the low-level block allocation is handled locally by the machines running the OSD servers.

File access in an object-based file system is usually initiated by an open call sent to a metadata server. The metadata server checks the access permissions and returns which OSDs serve the file, typically including a capability object that allows the client to authenticate to the OSDs and authorizes it to read or write the file.
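
The open-then-access flow can be sketched in a few lines. This is a generic illustration and not the capability format of any particular file system: it assumes that the metadata server and the OSDs share a secret key, and the names `SHARED_SECRET`, `issue_capability`, and `osd_accepts` are hypothetical.

```python
import hashlib
import hmac
import time
from typing import Optional

# Assumption: metadata server and OSDs share this key out of band.
SHARED_SECRET = b"example-secret-shared-by-mds-and-osds"


def _sign(file_id: str, mode: str, expires: int) -> str:
    """HMAC over the capability fields, so OSDs can detect tampering."""
    payload = f"{file_id}|{mode}|{expires}".encode()
    return hmac.new(SHARED_SECRET, payload, hashlib.sha256).hexdigest()


def issue_capability(file_id: str, mode: str, valid_for_s: int,
                     now: Optional[float] = None) -> dict:
    """Metadata-server side: called after the permission check succeeded."""
    current = time.time() if now is None else now
    expires = int(current + valid_for_s)
    return {"file_id": file_id, "mode": mode, "expires": expires,
            "signature": _sign(file_id, mode, expires)}


def osd_accepts(cap: dict, file_id: str, wants_write: bool,
                now: Optional[float] = None) -> bool:
    """OSD side: verify the capability without contacting the metadata server."""
    expected = _sign(cap["file_id"], cap["mode"], cap["expires"])
    if not hmac.compare_digest(expected, cap["signature"]):
        return False                      # forged or modified capability
    if cap["file_id"] != file_id:
        return False                      # capability is for another file
    current = time.time() if now is None else now
    if current > cap["expires"]:
        return False                      # capability has expired
    return cap["mode"] == "rw" or not wants_write
```

The point of the design is that the OSD can authorize a request locally, so the metadata server is only involved when a file is opened and not on every read or write.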

Storage at various levels of scale, cobalt123 (https://www.flickr.com/photos/cobalt/6041698818, cc by-nc-sa), Travis Wise (cc by), UBC Library (cc by-nc-nd)

The Distributed File System XtreemFS

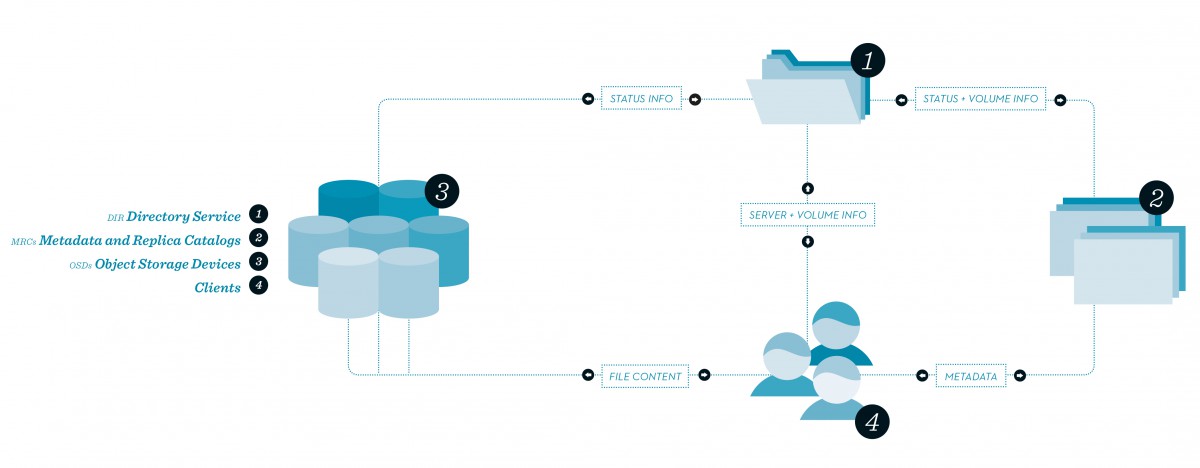

Our distributed and object-based file system XtreemFS (FN: J. Stender, M. Berlin, A. Reinefeld {2013}. XtreemFS - a file system for the cloud. Data Intensive Storage Services for Cloud Environments.) has been developed at ZIB since 2006. XtreemFS consists of metadata and replica catalogs (MRCs), object storage devices (OSDs), and a directory service (DIR).

XtreemFS architecture consisting of a directory service, metadata and replica catalogs, and object storage devices.

DIRECTORY SERVICE

The directory service allows clients to discover the other server components of an XtreemFS deployment. MRCs and OSDs register themselves with their addresses in the directory service.

METADATA AND REPLICA CATALOG

XtreemFS MRCs store all file metadata; that is, the names and hierarchy of files and directories and their attributes like permissions, file sizes, or time stamps. The MRC is responsible for permission checking while opening files. The DIR and MRC services store their data in the key-value store BabuDB, which has been designed with the aim of storing file-system metadata. BabuDB (FN: J. Stender, B. Kolbeck, M. Hogqvist, F. Hupfeld {2010}. BabuDB: Fast and efficient file system metadata storage. International Workshop on Storage Network Architecture and Parallel I/Os.) uses a Log-Structured Merge-Tree (LSM-Tree) data structure that holds large amounts of the database in memory and has support for asynchronous checkpoints. The DIR and MRC services can be made fault-tolerant by utilizing BabuDB’s replication.

Object Storage Devices

File content is stored on XtreemFS OSDs. The OSD service splits files into chunks of a configurable size and stores each chunk in its own file in a local file system. By utilizing a local file system, a wide range of storage devices like spinning disks, SSDs, or RAM disks are supported without dealing with low-level properties of these devices or worrying about block allocation.

Files can be striped over multiple OSDs, similarly to a RAID 0, to utilize the capacity and throughput of multiple OSDs for one file. Furthermore, files can be replicated to multiple OSDs. XtreemFS offers different replication policies to cover multiple use cases. The most common replication policy is a quorum-based protocol that allows concurrent read and write access to the same files while preserving POSIX semantics.
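
To make the chunking and RAID-0-style striping concrete, the following minimal sketch maps a byte offset in a file to a chunk and an OSD. It assumes plain round-robin placement of chunks; `StripingPolicy` and `locate` are hypothetical names for illustration, not XtreemFS interfaces.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class StripingPolicy:
    chunk_size: int  # bytes per chunk (configurable, as described above)
    width: int       # number of OSDs the file is striped over


def locate(offset: int, policy: StripingPolicy) -> tuple:
    """Map a file offset to (chunk number, OSD index, offset inside the chunk)."""
    if offset < 0:
        raise ValueError("offset must be non-negative")
    chunk_no = offset // policy.chunk_size
    osd_index = chunk_no % policy.width       # round-robin, as in RAID 0
    offset_in_chunk = offset % policy.chunk_size
    return chunk_no, osd_index, offset_in_chunk


# Example: 128 KiB chunks striped over four OSDs.
policy = StripingPolicy(chunk_size=128 * 1024, width=4)
print(locate(0, policy))
print(locate(5 * 128 * 1024 + 10, policy))
```

With this layout, a large sequential read touches all four OSDs in turn, which is how a single file can use the combined throughput of several devices.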

ACCESS PATTERNS AND STORAGE-PERFORMANCE CHARACTERISTICS

Predicting the performance of storage systems with users concurrently accessing data requires detailed knowledge about the device characteristics as well as the user’s access pattern.

An object storage device can be backed by different types of storage devices with different characteristics. These storage devices may be spinning disks (HDDs), solid-state drives (SSDs), an HDD or SSD array that increases performance and capacity and adds redundancy (RAID), or hybrid devices like a RAID of HDDs with an SSD cache. All have different access-performance characteristics.

Application Access Patterns

Storage-access patterns vary between applications. Many parallel applications, like MapReduce jobs or HPC applications that write checkpoints, have purely sequential data access. Other applications, like relational databases, access files at random offsets. Applications can also mix sequential and random access – for example, a relational database that also writes a transaction log. We focus on the cases at both ends of the spectrum. While sequential throughput is measured in MB/s, random throughput is usually measured in I/O operations per second (IOPS) with a request size of 4 kB.

Spinning Disks

The mechanics of spinning disks require disk head movements while accessing blocks in random order. A disk loses much of its peak performance in this phase. Spinning disks reach their peak performance for sequential access; that is, blocks are read or written according to the order of their block addresses. If multiple sequential streams are served by a single disk, the disk has to perform head movements to switch between these streams and the total throughput drops. This results in a near-random-access pattern for a large number of concurrent streams.

Moving head of a spinning disk, William Warby (cc by)

SSDs

The behavior of SSDs or RAM disks differs from that of spinning disks. SSDs and RAM disks do not have mechanical components and are not slowed down by concurrent access. As applications usually perform I/O requests synchronously – that is, the application is blocked until a request returns – multiple I/O streams are useful for fully utilizing such a fast storage device.

MIXED-ACCESS PATTERNS

Our evaluation has shown that mixing sequential and random-access patterns on a single device results in a nonoptimal resource utilization (FN: C. Kleineweber, A. Reinefeld, T. Schütt {2014}. QoS-Aware Storage Virtualization for Cloud File Systems. Proceedings of the 1st ACM International Workshop on Programmable File Systems.). Adding a single random-access stream to an HDD that serves sequential-access streams results in a significant drop of total throughput. To simplify the device models and keep the reservation scheduler device agnostic, we also do not mix workloads on other device types.

MODELING PERFORMANCE PROFILES

Because storage behavior is complex and subject to many uncertainties – for example, caused by different cache layers or unknown software components like the local file system on a storage device – we build an empirical performance profile for each OSD that enters an XtreemFS cluster. The profile is obtained by running a benchmark that measures sequential and random throughput at different levels of concurrent access.
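
One simple way to represent such a profile is a table of measured throughput per concurrency level with interpolation between the measured points. The sketch below is an assumption about how a profile could be stored and queried, not the XtreemFS implementation; the class name `PerformanceProfile` is hypothetical and the sample numbers are invented for illustration only.

```python
from bisect import bisect_left


class PerformanceProfile:
    """Measured total throughput of one device at several concurrency levels."""

    def __init__(self, samples: dict):
        if not samples:
            raise ValueError("at least one benchmark sample is required")
        self.levels = sorted(samples)                    # numbers of streams
        self.values = [samples[n] for n in self.levels]  # measured throughput

    def throughput(self, streams: int) -> float:
        """Estimate total throughput for a given number of concurrent streams."""
        if streams <= self.levels[0]:
            return self.values[0]
        if streams >= self.levels[-1]:
            return self.values[-1]        # no extrapolation beyond the benchmark
        i = bisect_left(self.levels, streams)
        if self.levels[i] == streams:
            return self.values[i]
        x0, x1 = self.levels[i - 1], self.levels[i]
        y0, y1 = self.values[i - 1], self.values[i]
        return y0 + (y1 - y0) * (streams - x0) / (x1 - x0)  # linear interpolation


# Invented sample values: sequential MB/s of a disk that degrades with concurrency.
hdd_sequential = PerformanceProfile({1: 100.0, 2: 80.0, 4: 60.0, 8: 40.0})
print(hdd_sequential.throughput(3))
```

A scheduler can query such a profile to estimate how much throughput remains on a device once another reservation is mapped to it.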

QUALITY OF SERVICE AND STORAGE VIRTUALIZATION

To provide users with a predictable environment that behaves similarly to a single-user system and provides constant performance on shared virtualized resources, we use reservations in terms of capacity, IO bandwidth, and desired access pattern. Using logical file-system volumes per reservation allows us to separate users into isolated file system namespaces and protect their data in the multitenant infrastructure.

The most critical part in a shared storage environment is the performance isolation that consists of appropriate reservation scheduling to find proper XtreemFS OSDs and reservation enforcement to ensure the promised performance for each reservation (FN: C. Kleineweber, A. Reinefeld, T. Schütt {2014}. QoS-Aware Storage Virtualization for Cloud File Systems. Proceedings of the 1st ACM International Workshop on Programmable File Systems.).

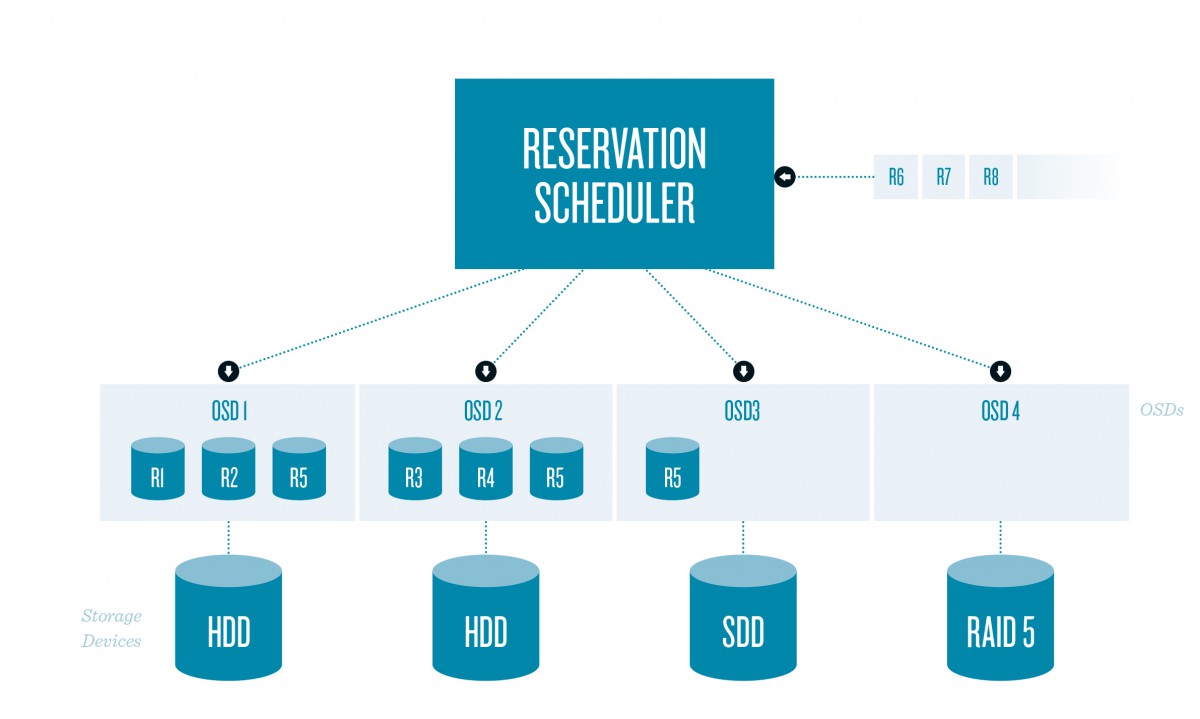

Reservation Scheduling

The capacity and the performance, in terms of sequential or random throughput, can be controlled by either selecting a single OSD that can serve a reservation or striping a reservation over multiple OSDs that together provide the necessary resources.

Each OSD provides a set of resources like capacity, sequential throughput or random throughput. The available resources of an OSD depend on the already allocated resources and the number of reservations that are currently mapped to it.

Our reservation scheduler receives a stream of incoming requests that have to be scheduled to OSDs immediately (or rejected) while the lifetime of a reservation is unknown. Our scheduling algorithm aims to minimize the number of necessary OSDs while guaranteeing the requested capacity and performance.

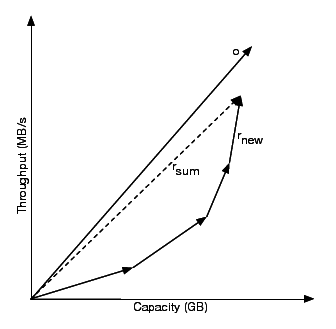

An OSD is selected by finding the minimal angle between an OSD vector o and a reservation vector rsum that equals the sum of all existing reservation vectors on the OSD and the new reservation vector rnew.

If a reservation does not fit on a single OSD, we preferably stripe the reservation over OSDs that are already in use. If that is not possible, unused OSDs are taken into consideration. To separate workload patterns, scheduling decisions only take OSDs into account that either are unused or have reservations with the same access pattern.

The selection of individual OSDs at each of these steps is a multidimensional bin-packing problem where each OSD and each reservation is represented by a vector. Each dimension of the vectors represents one of the resources: capacity, sequential throughput, and random throughput. The sum of all reservation vectors that are scheduled to an OSD must not exceed its resource vector. We use the heuristic known as Toyoda’s Algorithm [59] to find a suitable OSD for each reservation vector. Toyoda’s Algorithm minimizes the angle between the OSD vector and the sum of all reservation vectors that are scheduled to the OSD. Thereby reservations are scheduled to OSDs that have a similar ratio between different resources – for instance, a similar ratio between sequential throughput and capacity. This strategy should eventually result in a schedule in which as few resources as possible remain free.
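
The angle criterion can be illustrated with a small sketch. It covers only the single-OSD selection step and makes several assumptions that the text does not specify: resource values are used in their raw units without normalization, the access-pattern filter and striping are omitted, and the names `OSD` and `select_osd` as well as all numbers are hypothetical.

```python
import math
from dataclasses import dataclass

# Vector layout: (capacity, sequential throughput, random throughput)
ZERO = (0.0, 0.0, 0.0)


def add(a, b):
    return tuple(x + y for x, y in zip(a, b))


def fits(load, resources):
    """The summed reservations must not exceed the OSD's resource vector."""
    return all(l <= r for l, r in zip(load, resources))


def angle(a, b):
    """Angle in radians between two vectors; pi/2 if either has zero length."""
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    if norm == 0.0:
        return math.pi / 2
    cos = sum(x * y for x, y in zip(a, b)) / norm
    return math.acos(max(-1.0, min(1.0, cos)))  # clamp against rounding errors


@dataclass
class OSD:
    name: str
    resources: tuple          # vector o
    allocated: tuple = ZERO   # sum of reservations already mapped to this OSD


def select_osd(osds, r_new):
    """Return the feasible OSD with the smallest angle between o and r_sum."""
    best, best_angle = None, math.inf
    for osd in osds:
        r_sum = add(osd.allocated, r_new)
        if not fits(r_sum, osd.resources):
            continue
        a = angle(osd.resources, r_sum)
        if a < best_angle:
            best, best_angle = osd, a
    return best  # None means the reservation does not fit on any single OSD


osds = [OSD("A", (4000.0, 100.0, 1.0)),   # capacity-heavy device
        OSD("B", (500.0, 400.0, 50.0))]   # throughput-heavy device
chosen = select_osd(osds, (100.0, 80.0, 0.0))
print(chosen.name if chosen else "rejected")
```

In this example both devices could hold the reservation, but the throughput-heavy device has a resource ratio closer to the request and is therefore preferred, which leaves the capacity-heavy device free for capacity-heavy reservations.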


Reservation Enforcement

We implemented two mechanisms to enforce reservations per OSD:

- A request scheduler on each OSD that prioritizes users according to their reserved throughput.

- Quotas per reservation that ensure globally that no user can occupy more space on a volume than reserved.

The request scheduler has to ensure that each reservation receives a fraction of the total performance that is identical to its fraction of all throughput reservations. We implement this property by adding a weighted fair-queueing scheduler that replaces the existing request queue of the OSD.
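
The proportional-share property can be sketched with a small queue. This is a self-clocked approximation of weighted fair queueing written for illustration, not the scheduler used in the OSD; the class name `WeightedFairQueue` and the per-request `cost` parameter are assumptions.

```python
import heapq
import itertools


class WeightedFairQueue:
    """Serve requests so that each reservation gets a share proportional to its weight."""

    def __init__(self, weights: dict):
        self.weights = weights                    # reservation -> reserved throughput
        self.virtual_time = 0.0
        self.last_finish = {name: 0.0 for name in weights}
        self.heap = []
        self.counter = itertools.count()          # tie-breaker keeps FIFO order

    def enqueue(self, reservation: str, request, cost: float = 1.0) -> None:
        # A request starts when the previous request of the same reservation
        # finishes, but never before the current virtual time.
        start = max(self.virtual_time, self.last_finish[reservation])
        finish = start + cost / self.weights[reservation]
        self.last_finish[reservation] = finish
        heapq.heappush(self.heap, (finish, next(self.counter), reservation, request))

    def dequeue(self):
        # Always serve the request with the smallest virtual finish time.
        finish, _, reservation, request = heapq.heappop(self.heap)
        self.virtual_time = finish
        return reservation, request

    def __len__(self) -> int:
        return len(self.heap)


# Two backlogged reservations with a 3:1 throughput ratio.
queue = WeightedFairQueue({"a": 3.0, "b": 1.0})
for i in range(8):
    queue.enqueue("a", f"a{i}")
    queue.enqueue("b", f"b{i}")
print([queue.dequeue()[0] for _ in range(8)])
```

As long as both reservations have requests waiting, the reservation with three times the weight is served about three times as often, while an idle reservation does not accumulate credit.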

Heterogeneous Clouds

THE EU PROJECT “HARNESS”

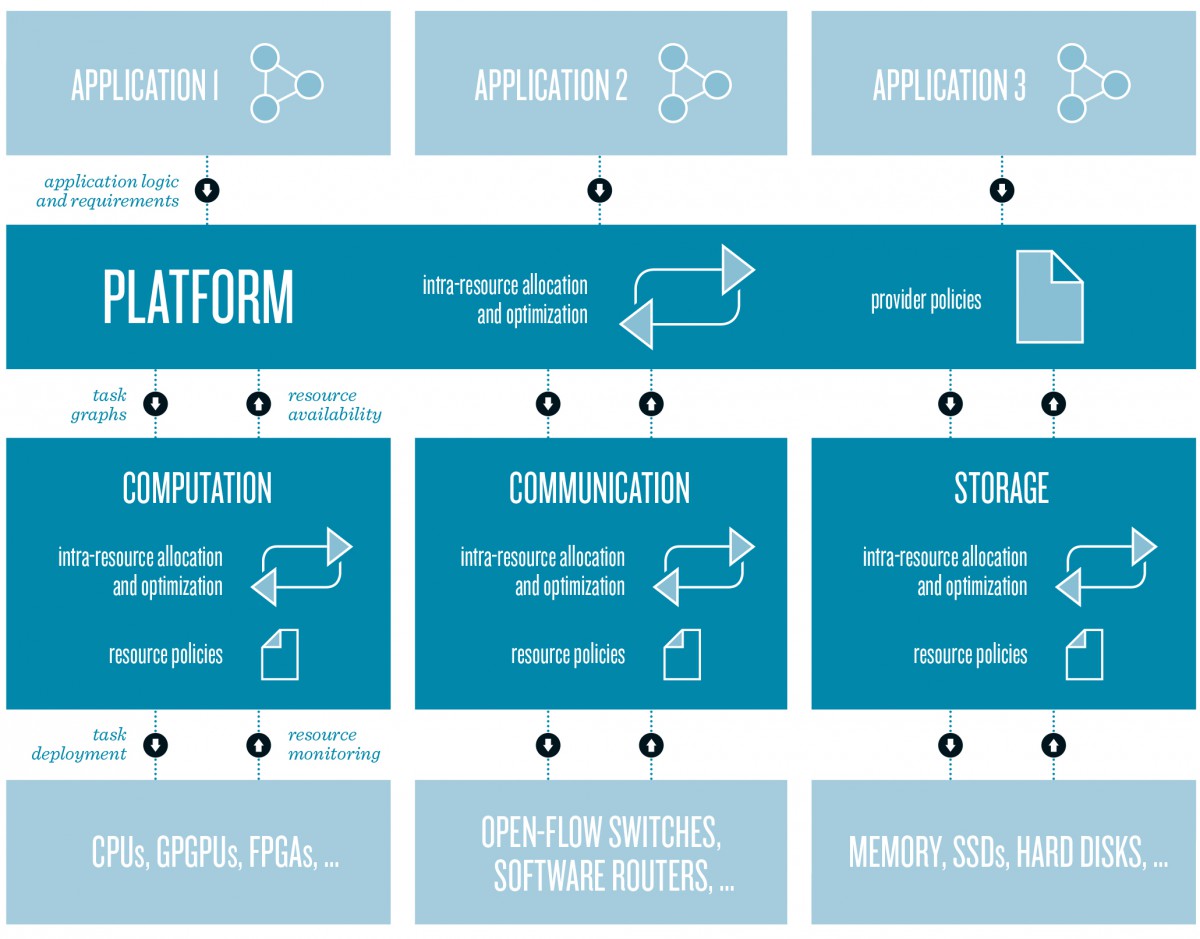

To provide performance guarantees on the application level, guaranteed performance is also required for other resources like computing resources and communication. We developed our solutions in the context of the EU project HARNESS, which has the aim of building a prototype of a platform-as-a-service (PaaS) cloud that provides performance guarantees for heterogeneous infrastructures.

Programming Model

We have seen how to use a heterogeneous storage cluster, consisting of HDDs, SSDs, or other devices, efficiently. Heterogeneous computing resources may be CPUs, GPUs, and FPGAs, for instance. Writing applications that can run on all of these architectures requires a programming model with abstractions that can be compiled to all of these target architectures. All resources that are used by an application are connected by virtual network links that can be provided by an Open Virtual Switch (Open vSwitch).

A user submits an application containing a manifest that describes possible configurations and cross-resource constraints to the HARNESS platform. The platform itself is resource agnostic and delegates the discovery and allocation of particular resources to different infrastructure resource managers. A cross-resource scheduler ensures that resource constraints like distance limits or throughput requirements between allocated resources are fulfilled. The platform layer builds application models by running a submitted application with different configurations.

Implementing Quality of Service Guarantees in Heterogeneous Clouds.

Efficient resource usage

Our prototype of a PaaS cloud stack provides quality of service guarantees for arbitrary applications on heterogeneous infrastructure with automatic application profiling. Running applications on the best-fitting devices saves resources and helps to reduce the energy consumption of a data center.