Challenges from Two Interdisciplinary Research Projects

How to chop and distribute hundreds of continuous high-bandwidth data streams efficiently in a network so that, for each time frame, the sensor data is collected in a particular analysis node? How to exploit modern network capabilities, like remote direct memory access, to improve the scalability of SAT solving on supercomputers? How to effectively test shared-memory parallel programs for concurrency bugs due to data races? These are three exemplary research questions we are currently tackling in two interdisciplinary research projects in the fields of high-energy physics and the automotive industry.

Highly Parallel Software Verification

Embedded software in modern cars enables new opportunities regarding the passengers’ safety and comfort, but it simultaneously presents great challenges for the automotive industry. The software has to guarantee high security standards. Unfortunately, the complexity of the verification and testing grows exponentially with the size of the software. Hence, parallel methods are needed. In the BMBF project Highly Parallel Software Verification (HPSV), partners from the automotive industry work together with researchers from Christian-Albrechts-Universität Kiel and ZIB. We are developing a high-performance software infrastructure to accelerate the verification and testing process, tackle more complex problems, and also make the deployment of new and more complex features possible.

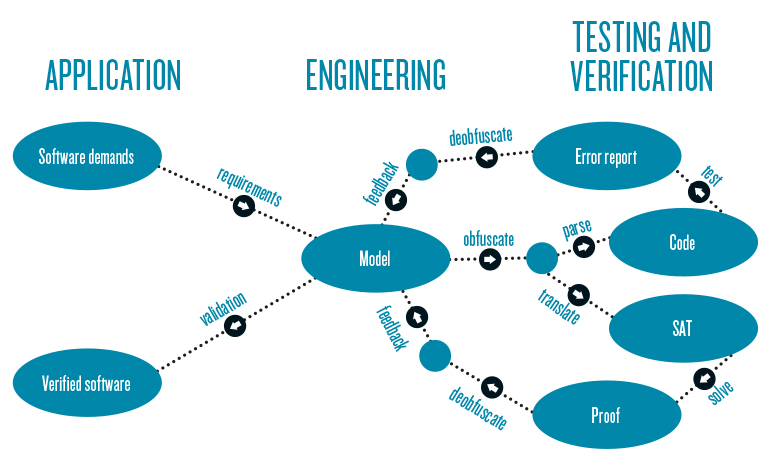

In the software-engineering process, statechart models are created according to predefined requirements. The model formally describes the behavior of the software and can be directly parsed into source code or translated into SAT formulas. Combined with formal requirements, the correctness of the model can be verified. Two alternative model representations are used to expose potential faults in the modeling process: critical concurrency issues can be found in the source code and SAT formulas can be used to prove that the predefined requirements are satisfied by the model. Both methods are completely automated and thereby give the engineers precise feedback with concurrency error reports and detailed proof pointing out where inconsistencies may remain in the model. To allow testing and verification as a service model in this highly competitive field, the models are obfuscated during the testing and verification process. The resulting feedback is then deobfuscated to make it interpretable in the automotive industry.

Automatic Testing of Concurrent Programs

Concurrent applications are particularly hard to debug. Since multiple threads access the same memory locations, data races can lead to unintended program behavior and requirement violations. Data races may occur with multiple unordered accesses to the same memory location by two or more threads, where at least one modifies the memory state. We developed Actul, a compile-time tool that detects data races. It checks data races for their harmfulness (i.e. whether they may cause a program violation).

Compile-Time Instrumentation

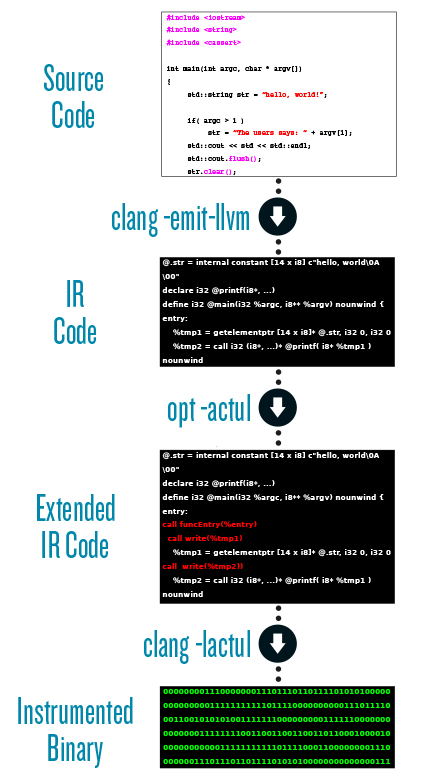

Actul is based on the LLVM compiler infrastructure. Source code is translated into the intermediate representation language (IR) of LLVM. Then each memory- and concurrency-relevant instruction in the IR code is modified to allow Actul to monitor all memory accesses and to control the thread execution order. Thus, the OS scheduler is overwritten by a user-level, deterministic scheduler, which records and replays executions and can reorder events in a subsequent execution. The instrumented IR code is compiled and linked against the Actul runtime library, which provides the functionalities of the scheduler and the test environment. Executing the resulting binary starts the testing, where Actul automatically starts and analyzes multiple test cases in parallel.

Instrumentation workflow of Actul using the LLVM compiler infrastructure and the Clang compiler.

Classifying Data Races

Data-race detectors often report false positives (i.e. potential data races that never happen in practice because of user-defined synchronizations or implicit orderings in the program workflow). Actul excludes false positives by replaying data races in additional test runs, with a different access order. For further analysis, the reorderable data races are permuted with other such data-races. Based on these test runs, each data-race access order is correlated with appearing program violations. Therefore, harmful data races can be identified as a root cause of a violation without the need to check each false positive or benign data race manually, as typically would be necessary with other tools.

Example error report of Actul’s concurrency analysis for one benign and one harmful data race.

Faster Debugging Process

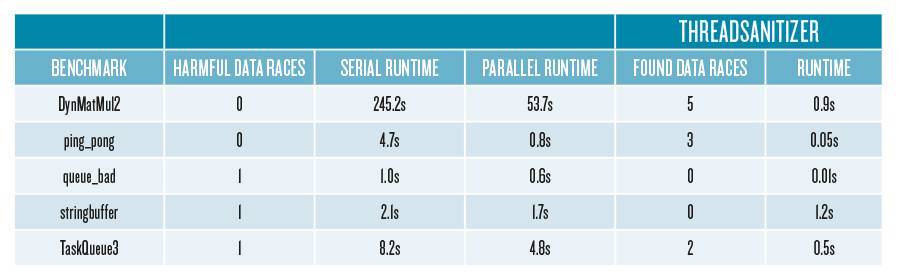

Runtime analysis of Actul and LLVM-ThreadSanitizer on an Intel Xeon Phi 7250 CPU: 68 cores, 1.4 GHz. The harmful data race found by Actul are the only harmful races in the benchmark. The parallel runtime is generated by running independent tests in parallel on one node.

Runtime analysis of Actul and LLVM-ThreadSanitizer on an Intel Xeon Phi 7250 CPU: 68 cores, 1.4 GHz. The harmful data race found by Actul are the only harmful races in the benchmark. The parallel runtime is generated by running independent tests in parallel on one node.

Scaling with Unstructured Asynchronous Communication

SAT Solving in Context of Model Validation

he Boolean satisfiability problem (SAT) consists of literals and clauses. In our context, different literals describe different states of components of a model (e.g. the current speed of a car). A clause describes a group of these components that are connected with each other (e.g. the interaction between the regulation yield by a cruise control and the actual speed of the car). All clauses represent the whole model with all connections representing the requirements of the system (e.g. at some point, the speed should match the specification of the cruise control).

Finding SAT solutions is NP complete. The search space of a valid assignment is reduced with the conflict-driven-clause-learning approach (cdcl). While checking different assignments, either a valid assignment of all literals is found or we get a contradiction with this assignment leading to a reduction of the search space by introducing new clauses. These clauses are the key to finding a solution to the problem. With such assignments, we can either globally prove the correctness of the model or its unsatisfiability, which indicates system defects. These system defects can then be reported back to correct the system (e.g. the maximum speed is less than the specification of the cruise control and cannot be reached).

Scaling SAT

Each solver learns its own clauses to reduce its search space. Exchanging these clauses will help other solvers to improve. While scaling up, the exchange of too many clauses is not necessarily beneficial because a huge amount of possible clauses can reduce the effectiveness of each solver due to handling too much data. Therefore, we need an effective and flexible way to exchange clauses based on an offer–select scheme.

RDMA Communication Approach

Remote direct memory access (RDMA) offers an efficient way to asynchronously distribute packages of learned clauses to a set of solvers. First, each solver offers such a package to other solvers, including a scoring value. Each solver selects a limited number of these packages based on the scoring value, retrieves them, and informs the offerers when done.

RDMA allows us to establish this unstructured, asynchronous communication pattern. Depending on the system size, network capabilities, and state of the problem-solving process, we can adjust the number of offers that each solver distributes and the number of offers each solver then actually takes.

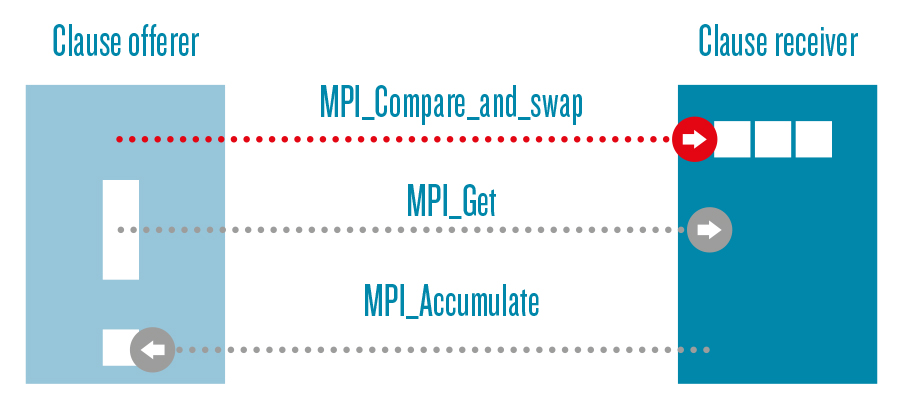

Offering data (MPI_Compare_and_swap) with situational receiving (MPI_Get) and finishing data transfer (MPI_Accumulate) via RMA.

Offering data (MPI_Compare_and_swap) with situational receiving (MPI_Get) and finishing data transfer (MPI_Accumulate) via RMA.

Clause selection via scoring scheme

Clauses have different characteristic values (e.g. literal block distance (LBD)) indicating under what circumstances the clause is generated. Thus, we can distinguish between different types of clauses. A scoring scheme can now rate the packages of clauses depending on the scoring of a package. The decision of fetching or declining a package depends on this score comparing it to all other scores of potential packages. So, we can select the best subset of packages for fetching to reduce the amount of clauses exchanged.

Results and future work

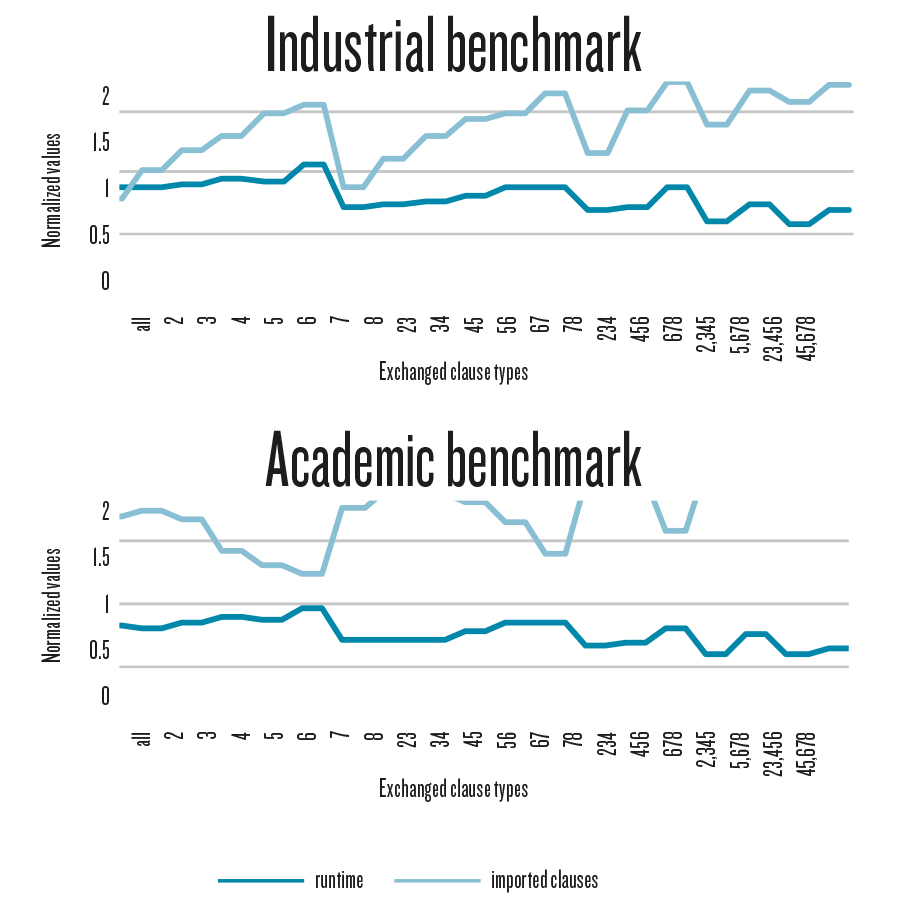

The type of clauses greatly influences the overall solution time. When scaling the problem to parallel systems, only those clauses that help reducing the runtime should be distributed among the processes. We will further study the effect of different heuristic clause distributions on the overall runtime.

ccumulated runtime characteristic correlated with the accumulated amount of exchanged clauses for different combinations of clause types for two different problem classes of problems from the SAT competition 2013

ccumulated runtime characteristic correlated with the accumulated amount of exchanged clauses for different combinations of clause types for two different problem classes of problems from the SAT competition 2013

Scaling High-Bandwidth Data Streams

Compressed-Baryonic-Matter Experiment

The compressed-baryonic-matter (CBM) experiment at the Facility for Antiproton and Ion Research (FAIR) in Darmstadt aims to explore the quantum-chromodynamics (QCD) phase diagram in the region of high baryon densities using high-energy nucleus–nucleus collisions. Many sensors surround the experiment to collect data during beam time. The overall data rate from the sensors is expected to exceed 1 TB/s (1,000,000,000,000 bytes per second).

The CBM data streams from about 600 input channels have to be spread and grouped to form views of time intervals. The grouped data is analyzed to detect and discover different phenomena. Approximately 600 nodes will be used to spread and group data streams. This is done by the First-Level Event Selector (FLES), a high-performance compute cluster. The software to build time slices for the analysis, called FLESnet, must be scalable, highly available, and fault-tolerant.

FLESnet Architecture

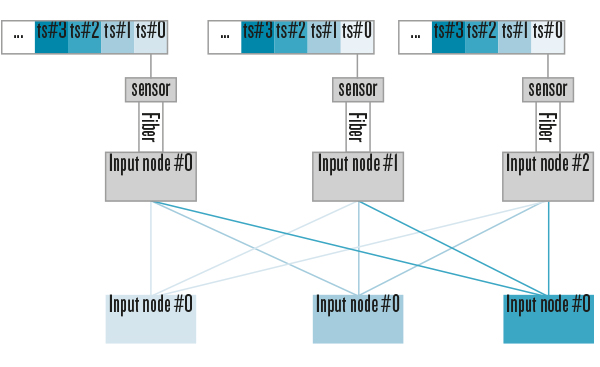

The high-level design of FLESnet consists of two kinds of processes (called nodes in the following): input and compute nodes. Input nodes receive data from the sensor links via a custom FPGA-based input interface and chop the stream into time intervals, called micro time slices, before distributing them for time-slice building. Compute nodes receive micro time slices of a corresponding time interval from all input nodes to form complete time slices before analyzing them further.

Each compute node reserves one buffer in its memory for each input node. Input nodes use remote direct memory access (RDMA) to write their contribution directly to the compute nodes' memory and inform them accordingly. Before overwriting the same memory address in the buffer, input nodes wait for an acknowledgement from the compute node that the time slice was processed and the buffer is free for reuse.

FLESnet Challenges

FLESnet faces several challenges in its design:

- Portability: FLESnet was implemented using the API of InfiniBand Verbs and relied on connection-oriented communication. To also exploit other modern interconnects, we ported FLESnet to the open-source framework OpenFabrics Interface (OFI) Libfabric. This widens the spectrum of clusters FLESnet can run on and keeps future decisions on the actual computer and networking hardware flexible.

- Synchronization: Although each input node can fill its buffer space at each compute node, buffer space cannot be reused until contributions from all input nodes are received to complete the time slice and the analysis is finished. Therefore, a single lazy node or slow connection would cause input nodes to be out of synchronization and slow down the entire cluster.

- Scalability: Due to the limited buffer space, each compute node should receive the contributions from all input nodes for a particular time slice in a small time frame. As a result, input nodes should send their data coordinated to not overload individual network links or become blocked due to full buffers at compute nodes. On the other hand, FLESnet's aggregate bandwidth should scale linearly with the number of nodes.

- Monitoring, scheduling, and fault tolerance: FLESnet has to avoid network congestion, node failures, and load imbalances on heterogeneous hardware. It must be able to tolerate node and link failures because the beam time is costly and each physics experiment is unique and cannot be repeated.

Distributed Deterministic Scheduler

To address the outlined challenges, FLESnet is supposed to transmit data in high throughput and low latency. It should minimize the time gap between the first and last delivered contributions of each time slice to compute nodes. FLESnet should not congest a single node or link at a time or leave other nodes or links idle. Our distributed deterministic scheduler (DDS) synchronizes the input nodes to send their contributions within a particular time interval. Furthermore, it attempts to eliminate the end-point congestion at compute nodes. To do so, DDS proposes a start time and duration for each interval of time slice distribution that input nodes should follow. DDS consists of two schedulers, one at the input nodes and another at the compute nodes.

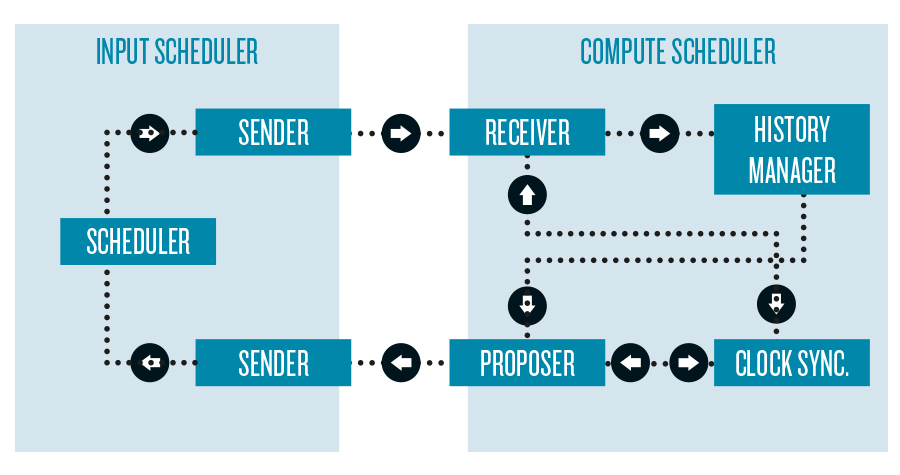

Compute Scheduler

The compute scheduler stores the history of the transmitted intervals and proposes the needed information for the upcoming intervals. It consists of four modules: receiver, history manager, proposer, and clock synchronizer. The receiver module collects the actual starting times and durations from input schedulers. After that, it sends them to the history-man-a-g-er module to calculate statistics and storage. The proposer module calculates the expected starting time and duration of up coming intervals based on the statistics from the history manager and sends it to input schedulers. The clock-synchronizer module considers different clock drifts between different machines for higher accuracy.

Input Scheduler

The input scheduler receives the proposed starting time and duration of an interval, and then schedules the transmission accordingly. It consists of three modules: sender, receiver, and scheduler. The sender module updates the transmitted messages to compute nodes and includes the actual starting time and duration of the completed interval. The receiver module receives the proposed interval information from the compute scheduler. The scheduler module sched-ules the transmission according to the receiver information from the receiver module. It informs the sender module once a complete interval is transmitted.

Results

Synchronization Overhead

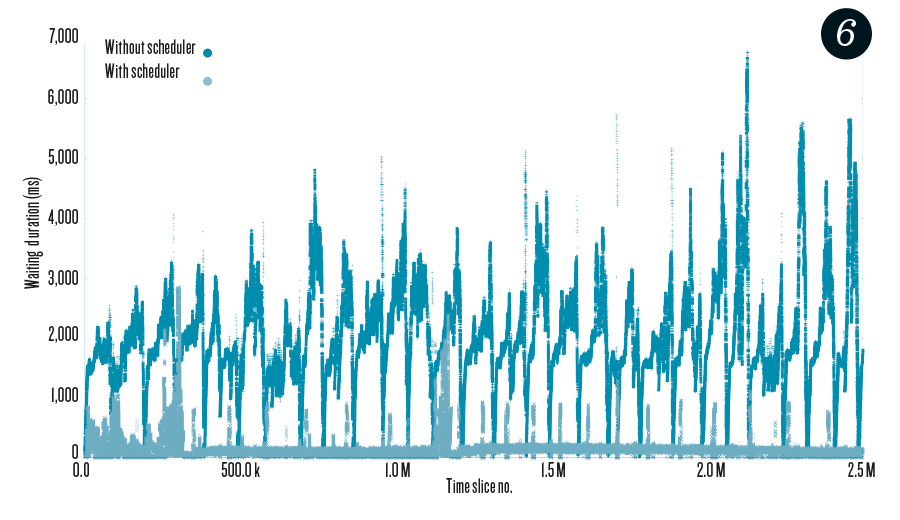

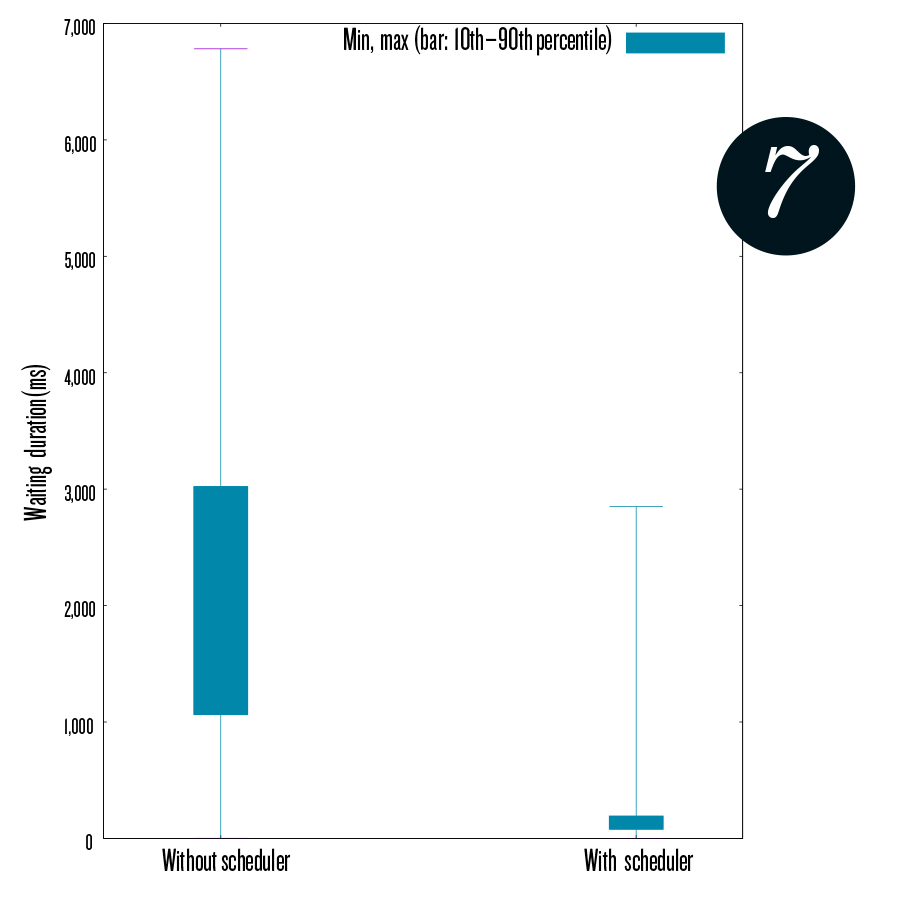

DDS minimizes the duration needed for a compute node to receive a complete time slice. Figure 6 depicts the time gap between the first and last arrival of 2.5 million time slices with/without our scheduler on 192 nodes, 96 input nodes, and 96 compute nodes. This figure shows how the DDS helped in minimizing the duration needed to receive a complete time slice.

Figure 7 depicts the variance with and without the scheduler compared with the minimum and maximum waiting duration of each. It shows that 90% of the needed waiting duration is exceptionally small when DDS is used.

Scalability

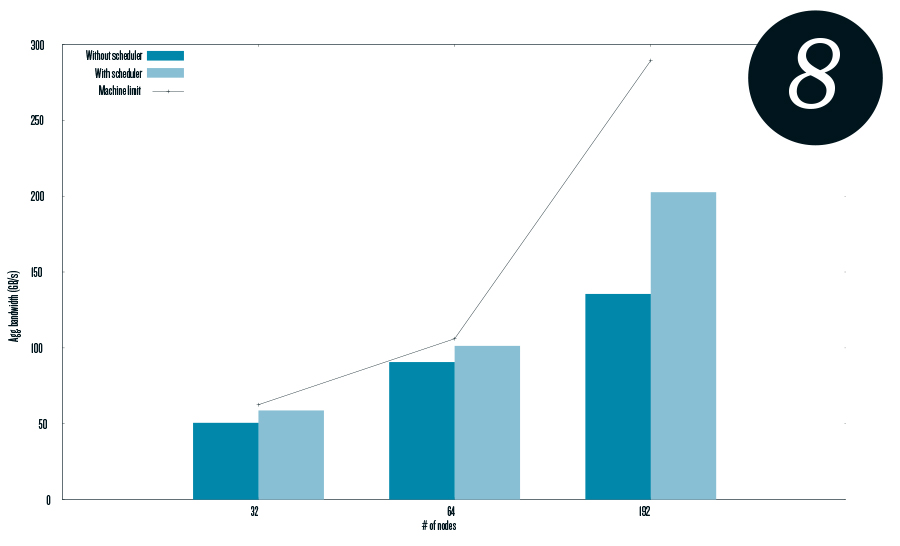

FLESnet. Figure 8 shows how the aggregated bandwidth is enhanced using DDS compared with no scheduler.

Future Work

We plan to further enhance the scheduler to achieve an aggregated bandwidth as close as possible to the machine limit. Furthermore, we aim to design and implement a fault-tolerance mechanism so that FLESnet can tolerate faults in nodes and the underlying network.