This project is part of the collaborative research project “Sensorimotor Processing, Decision Making, and Internal States: Towards a Realistic Multiscale Circuit Model of the Larval Zebrafish Brain”, carried out by neuroscientists and computer scientists and headed by Florian Engert. The overall goal is to create a realistic multiscale circuit model of the larval zebrafish brain – from the nanoscale at the synaptic level, to the microscale describing local circuits, to the macroscale brain-wide activity patterns distributed across many regions.

The overall project is divided into three projects:

Project 1: “Atlas” (Lead: Jeff Lichtman): Construct an online brain atlas for the larval zebrafish at unprecedented scale. The project is divided into three major parts: “Macro” for annotation and 3D visualization of gross brain anatomy, “Micro” for description of network architecture at cellular resolution, and “Nano” for integration of ultrastructural information (EM volumes).

Project 2: “Behavior and Imaging” (Lead: Florian Engert): The project aims to implement, execute, and analyze a series of behavioral experiments involving brain-wide imaging and interrogation of neural circuits in tethered and freely swimming animals.

Project 3: “Modeling and Theory” (Lead: Haim Sompolinsky): Quantitative brain-wide circuit modeling of the larval zebrafish, guiding further experiments for validation, refinement, and hypothesis testing. It includes behavior models as well as conceptual and functional circuit models.

In addition, there are five core modules: Administration, Engineering, Electron Microscopy, Live Imaging, and Data Science.

Data Science (Lead: Joshua Vogelstein): Build and deploy a cloud-based data management system to store, manage, curate, and visualize all measured and derived image data. Design and develop data processing pipelines for the behavioral and imaging data from Project 2. Facilitate reproducible and extensible modeling (Project 3) for zebrafish and other model systems.

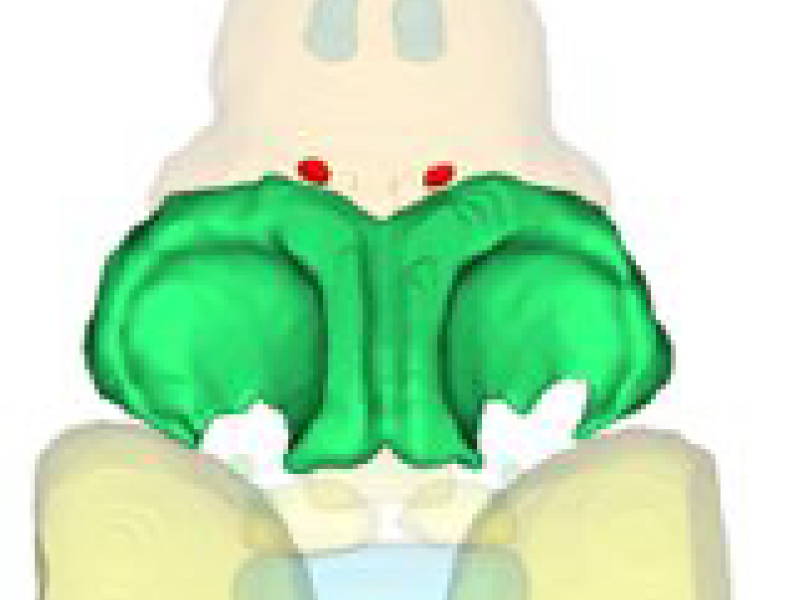

One central part of the project is the creation and visualization of a multiscale brain atlas that includes relevant anatomical structures and neural circuits. It will rely on microscopy data for reconstructing anatomy and on experimental data (electrophysiological, …) for reconstructing functional circuits. The atlas will not be static but will grow as new data become available. Data acquisition, preprocessing, integration (image registration, segmentation, and structure identification), and management are therefore important tasks, carried out at Johns Hopkins University. Our task is to reconstruct anatomical structures from probabilistic segmentations and to develop a user interface that lets researchers perform analyses with extensive visual support. A key challenge is to build a system that scales both to the large amount of data to be processed and to multiple users.
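Reconstruction starts from probabilistic segmentations. As a minimal sketch, assuming each voxel carries one probability per anatomical structure in a voxel-major `Float32Array` (a hypothetical layout, not necessarily what the partners deliver), hard labels and a simple uncertainty measure could be derived as below. `labelFromProbabilities`, `UNCERTAIN`, and the default threshold of 0.5 are illustrative choices, not part of any existing tool.

```typescript
// Minimal sketch: derive hard labels plus a per-voxel uncertainty map from a
// probabilistic multi-label segmentation. Hypothetical layout: `probs` stores
// `numLabels` probabilities per voxel, voxel after voxel.

const UNCERTAIN = -1; // hypothetical marker for voxels without a confident label

interface LabelResult {
  labels: Int32Array;   // argmax label per voxel, or UNCERTAIN
  margin: Float32Array; // top-1 minus top-2 probability (small = ambiguous)
}

function labelFromProbabilities(
  probs: Float32Array,
  numLabels: number,
  minConfidence = 0.5, // hypothetical threshold on the top probability
): LabelResult {
  if (numLabels < 1 || probs.length % numLabels !== 0) {
    throw new Error("probs length must be a multiple of numLabels");
  }
  const numVoxels = probs.length / numLabels;
  const labels = new Int32Array(numVoxels);
  const margin = new Float32Array(numVoxels);
  for (let v = 0; v < numVoxels; v++) {
    let best = -1, bestP = -Infinity, secondP = -Infinity;
    // Track the two largest probabilities for this voxel.
    for (let k = 0; k < numLabels; k++) {
      const p = probs[v * numLabels + k];
      if (p > bestP) { secondP = bestP; bestP = p; best = k; }
      else if (p > secondP) { secondP = p; }
    }
    // With a single label there is no runner-up; use the top probability itself.
    margin[v] = numLabels > 1 ? bestP - secondP : bestP;
    labels[v] = bestP >= minConfidence ? best : UNCERTAIN;
  }
  return { labels, margin };
}
```

Boundaries between differently labeled voxels are where separating surfaces would be placed; voxels with a small margin lie where neighboring structures compete, so they indicate where a surface position is uncertain. The margin is only one possible measure; per-voxel entropy would be an alternative.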

The subproject conducted at ZIB deals mainly with the construction and visualization of the brain atlas, in close collaboration with Projects 1 and 3 and the Data Science core. Specifically, our work will include the following tasks:

Atlas construction (hierarchical geometries with semantics)

- Building on image segmentations (provided by project partners), create separating surfaces between biological structures. R&D task: Develop solutions to deal with segmentation uncertainty, biological variability and non-unique semantics.

- Representing the biological hierarchy, based on an agreed ontology and annotated image data. R&D task: Develop suitable data structures that can be easily updated when new anatomical or semantic information becomes available.

- Dealing with the multiscale character of the data. R&D task: Develop level-of-detail representations to reduce the computational expense.

- Integration of functional circuit models. R&D task: Generate circuit models based on anatomical data, provide an interface to simulator code, analyze simulations, and compare them to functional measurements.
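For the hierarchy task, one simple option is a tree whose nodes can be re-parented in place, so that new anatomical or semantic information does not require rebuilding the whole structure. The sketch below assumes a plain parent/child tree keyed by string IDs; `RegionOntology` and its methods are hypothetical names, not the project's data model.

```typescript
// Minimal sketch of an updatable region hierarchy (hypothetical names; the
// agreed ontology may need a richer structure than a single-parent tree).

interface RegionNode {
  id: string;
  name: string;
  parent: string | null;
  children: Set<string>;
}

class RegionOntology {
  private nodes = new Map<string, RegionNode>();

  addRegion(id: string, name: string, parent: string | null = null): void {
    if (this.nodes.has(id)) throw new Error(`duplicate region ${id}`);
    if (parent !== null && !this.nodes.has(parent)) throw new Error(`unknown parent ${parent}`);
    this.nodes.set(id, { id, name, parent, children: new Set<string>() });
    if (parent !== null) this.get(parent).children.add(id);
  }

  // Re-attach a region when new knowledge changes its place in the hierarchy.
  moveRegion(id: string, newParent: string | null): void {
    const node = this.get(id);
    if (newParent !== null) {
      this.get(newParent); // throws if the new parent is unknown
      if (newParent === id || this.ancestors(newParent).includes(id)) {
        throw new Error("move would create a cycle");
      }
    }
    if (node.parent !== null) this.get(node.parent).children.delete(id);
    node.parent = newParent;
    if (newParent !== null) this.get(newParent).children.add(id);
  }

  // IDs from the region's parent up to the root.
  ancestors(id: string): string[] {
    const result: string[] = [];
    let current = this.get(id).parent;
    while (current !== null) {
      result.push(current);
      current = this.get(current).parent;
    }
    return result;
  }

  // All IDs below the region, collected depth-first.
  descendants(id: string): string[] {
    const result: string[] = [];
    const stack = Array.from(this.get(id).children);
    while (stack.length > 0) {
      const next = stack.pop()!;
      result.push(next);
      stack.push(...Array.from(this.get(next).children));
    }
    return result;
  }

  private get(id: string): RegionNode {
    const node = this.nodes.get(id);
    if (!node) throw new Error(`unknown region ${id}`);
    return node;
  }
}
```

Because each node stores only its parent and children, moving a subtree touches just two nodes. An ontology in which a term can belong to several parents would need a directed acyclic graph instead of this tree.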

Atlas visualization, exploration, digital experiments

- Utilizing multiscale representations for interactive rendering. R&D task: Develop techniques for seamless visual transitions between representations of different levels of detail.

- Ontology-based navigation and visualization. R&D task: Develop user-centered techniques for fast navigation with and without detailed anatomical knowledge.

- Provision of automated, concatenable tools for analyzing data in the atlas, controlled by editable scripts. R&D task: Develop a set of combinable analysis tools that perform script-controlled data analyses.
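The idea of combinable tools controlled by editable scripts can be illustrated with a small composition scheme: each tool is a function from data to data, and a script is an ordered list of tool names with parameters (for example, a JSON array that users edit). The data model (`Data` as named numeric arrays), the tool names, and `runScript` are hypothetical placeholders; real tools would operate on atlas volumes, meshes, or activity traces.

```typescript
// Minimal sketch of chainable analysis steps driven by an editable script.
// All names and the data model are hypothetical.

type Data = Record<string, number[]>; // hypothetical: named measurement arrays
type Tool = (data: Data, params: Record<string, number>) => Data;

// Apply `f` to every array, returning a new Data object.
function mapValues(data: Data, f: (xs: number[]) => number[]): Data {
  const out: Data = {};
  for (const key of Object.keys(data)) out[key] = f(data[key]);
  return out;
}

// Registry of available tools; none of them modifies its input.
const tools: Record<string, Tool> = {
  // Keep only values >= params.min.
  threshold: (data, params) => mapValues(data, (xs) => xs.filter((x) => x >= params.min)),
  // Multiply every value by params.factor.
  scale: (data, params) => mapValues(data, (xs) => xs.map((x) => x * params.factor)),
  // Replace each array by its mean (NaN for an empty array).
  mean: (data) => mapValues(data, (xs) => [xs.reduce((a, b) => a + b, 0) / xs.length]),
};

interface Step {
  tool: string;
  params?: Record<string, number>;
}

// Run the steps in order, feeding each tool's output into the next one.
function runScript(script: Step[], input: Data): Data {
  return script.reduce<Data>((data, step) => {
    const tool = tools[step.tool];
    if (!tool) throw new Error(`unknown tool: ${step.tool}`);
    return tool(data, step.params ?? {});
  }, input);
}
```

For instance, the script `[{ "tool": "threshold", "params": { "min": 2 } }, { "tool": "mean" }]` applied to `{ regionA: [1, 2, 3, 4] }` yields `{ regionA: [3] }`. Since every tool returns new data, a script can be edited, extended, and rerun without side effects on the input.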

The atlas has three major functions: it serves as a framework for spatially organized storage of data, as a means of exploring these data, and as a means of distributing the data to the community in a readily accessible and informative way. The atlas will become part of a cloud-based ecosystem providing “Neuroscience as a Service” (NaaS), which allows users to integrate and browse data as well as to run models in desktop and notebook environments.

If you would like a glimpse of the current status, watch this movie; you can reproduce the actions shown in it by downloading a preliminary version of this software and using the data given below.

Software

As the starting point of our development, we will use Neuroglancer, a WebGL-based viewer for volumetric image data, 3D meshes, and skeletons. It is a purely client-side program written in TypeScript. We will extend this viewer with new modules according to the project requirements. Developers can download the latest version of the source code here.

3D Data

Zebrafish brain regions, created within the Harvard ZBrain project and converted by us to 3D meshes, can be downloaded here.

Additional Material

Below is a short video of the current system showing the anatomically correct zebrafish brain regions in 3D using Neuroglancer.

Funding

This work is funded by the National Institutes of Health (NIH), Grant Award Number 1U19NS104653-01 REVISED; FAIN# U19NS104653; CFDA# 93.853.