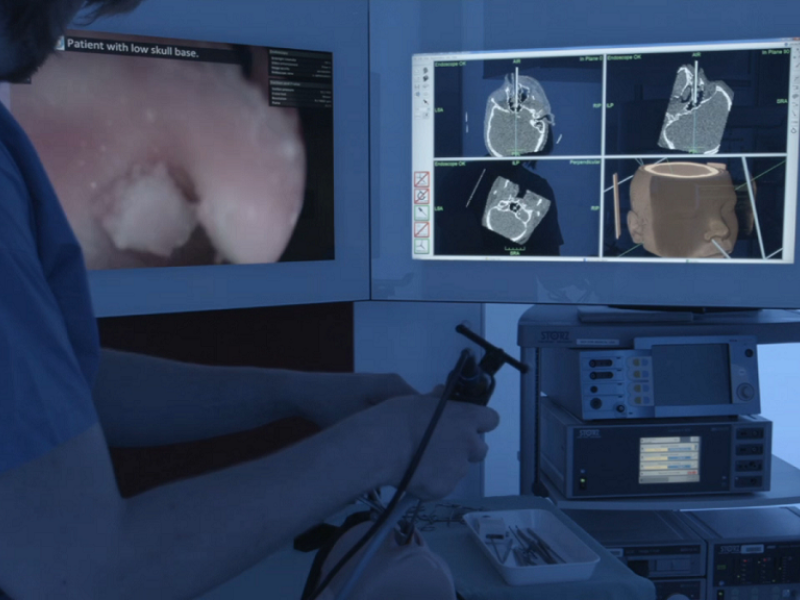

With advances in surgical techniques, modern procedures are increasingly moving towards minimally invasive surgery (MIS). A surgical camera (coupled with an endoscope) and several thin instruments give the surgeon access to a region inside the human body through small incisions or natural orifices. However, MIS also introduces challenges such as a limited view of the surgical site, rotating images and impaired hand-eye coordination. Surgical guidance and navigation assistance tools are therefore necessary to provide contextual support to surgeons during the procedure. The aim of the COMPASS project is to develop an intelligent and cooperative assistance system that recognizes the surgeon's cognitive navigation process and proactively supports the navigation task during the procedure through comprehensive, immersive visualization and interaction.

Fig. 1 Endonasal endoscopic surgery: Hand-Eye Coordination

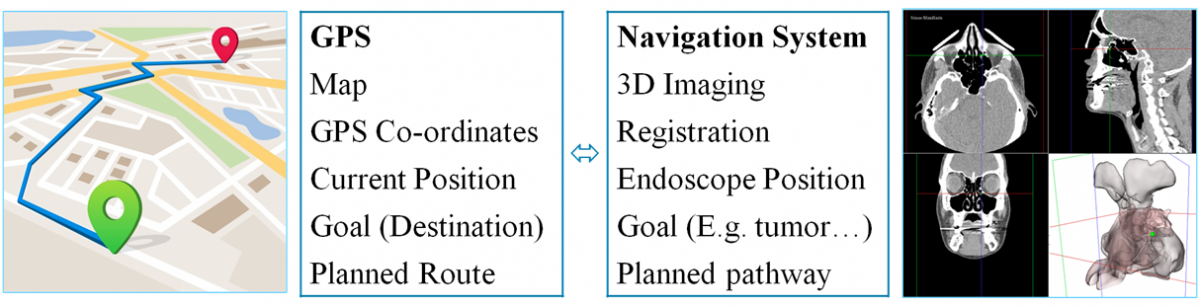

Similar to a GPS navigation system, an anatomical map of the patient will be created from the stereo-endoscopic camera, and the surgeon will navigate inside the patient's body with a constantly adapting map on which the current position is displayed. Information about surgical activities, characteristic anatomical landmarks and navigation hints will be seamlessly incorporated into the integrated OR display.

A central focus of the project is the research and development of prediction functions for the surgical navigation process, such as cancer tissue detection, surgical activity recognition, and classification of anatomical landmarks or structures, using multimodal stereo-endoscopic and sensor information.

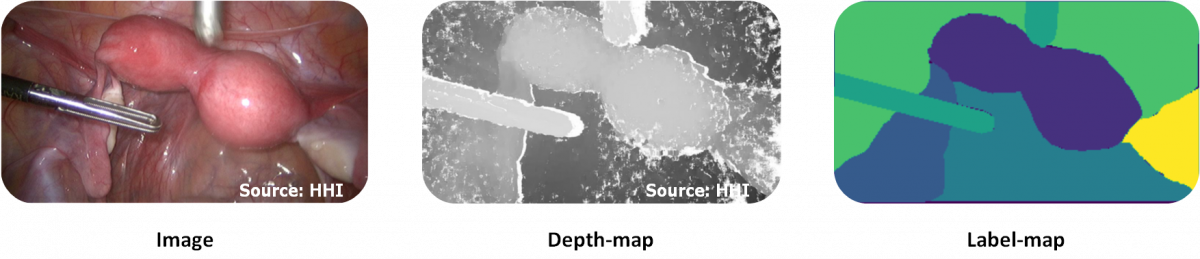

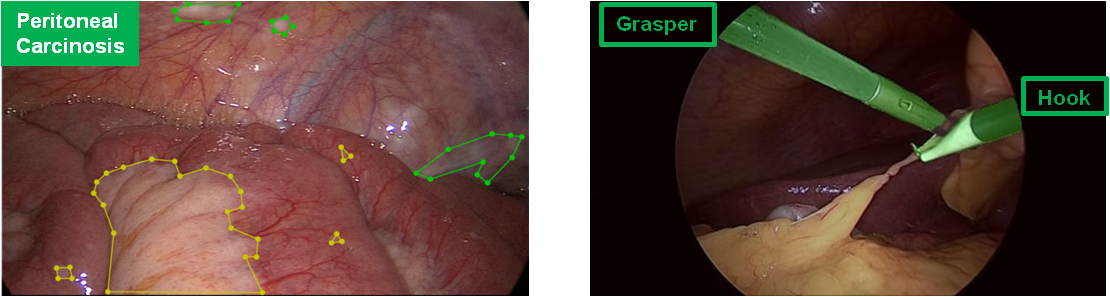

Recognize visual attributes

Identifying visual attributes (e.g. instruments [1,3] or pathologies) is fundamental for deriving context-aware cues from the endoscopic video stream.

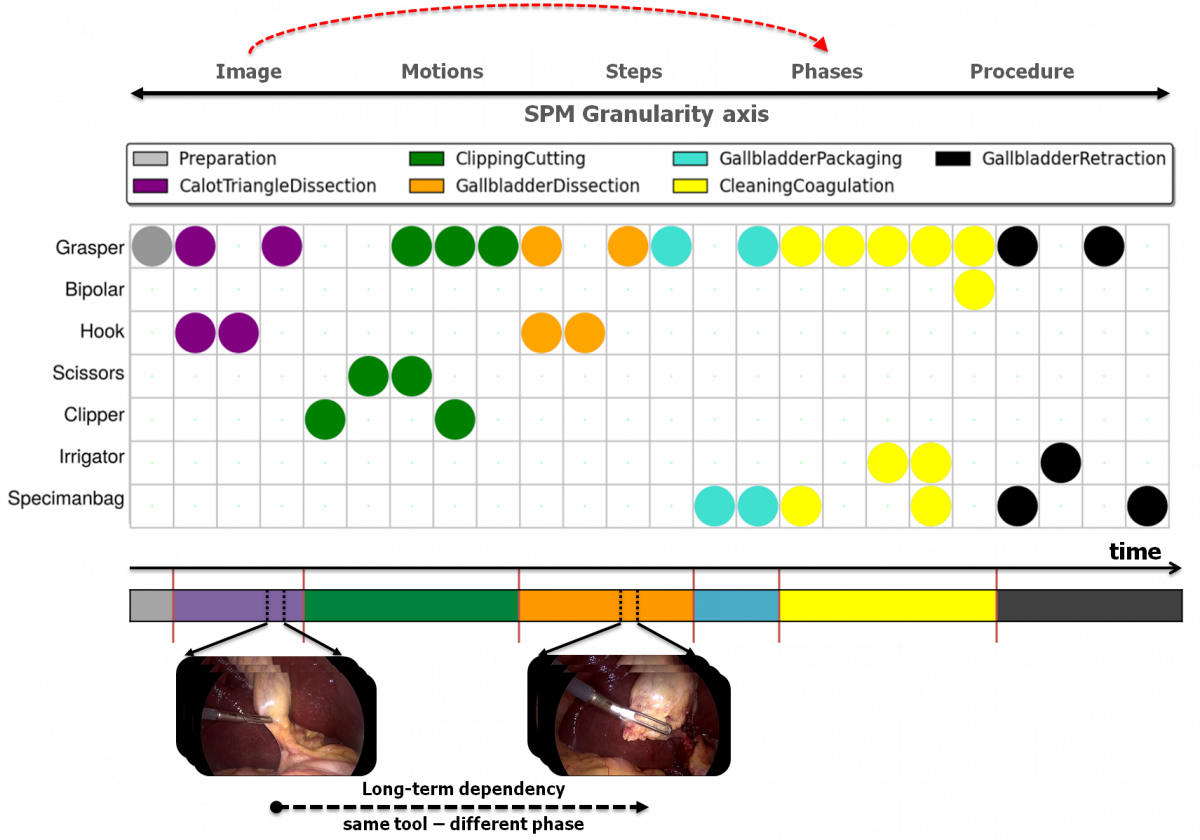

Surgical activity recognition

Recognizing surgical activities [2] or events enables automated understanding of the progress of the surgery.

Surgical Navigation Assistance

Navigation assistance can be improved by coupling tracking devices with image-based localisation of landmark structures.

References

[1] M. Sahu, A. Szengel, A. Mukhopadhyay, S. Zachow. Simulation-to-Real domain adaptation with teacher-student learning for endoscopic instrument segmentation. International Journal of Computer Assisted Radiology and Surgery, 2021.

[2] M. Sahu, A. Szengel, A. Mukhopadhyay, S. Zachow. Surgical phase recognition by learning phase transitions. Current Directions in Biomedical Engineering, Vol. 6, No. 1, 2020.

[3] M. Sahu, R. Strömsdörfer, A. Mukhopadhyay, S. Zachow. Endo-Sim2Real: Consistency Learning-Based Domain Adaptation for Instrument Segmentation. In: Medical Image Computing and Computer Assisted Intervention, Vol. 12263, pp. 784-794, 2020.