Within the BiOPAss (Bild, Ontologie und Prozessgestützte Assistenz für die minimal-invasive endoskopische Chirurgie) project, a novel marker-free, image-based navigation approach will be developed to support the endoscopist in identifying the anatomical position of the endoscope within the lumina of the human body. Together with clinical domain experts, research groups at Leipzig University and TU Munich, and companies, concepts, data structures, and efficient algorithms will be developed that match the image features of newly acquired endoscopic image sequences against those of previously stored sequences. In combination with process information and dedicated ontologies, positional hints will be visualized graphically within an anatomical reference model to provide a navigational aid to the surgeon.

Introduction

Currently, endoscopy is the most widely used technique for the diagnosis and treatment of gastrointestinal tract and sinusitis-related diseases. However, surgeons face challenges in navigating the endoscope during the procedure due to the limited field of view (FOV) of the operating area, rotating images, and impaired hand-eye coordination. Experienced endoscopic surgeons can mentally assign an anatomical position of the endoscope to the endoscopic image; novice surgeons, however, often struggle to determine the anatomical position and orientation of the endoscope from the endoscopic images displayed on the monitor.

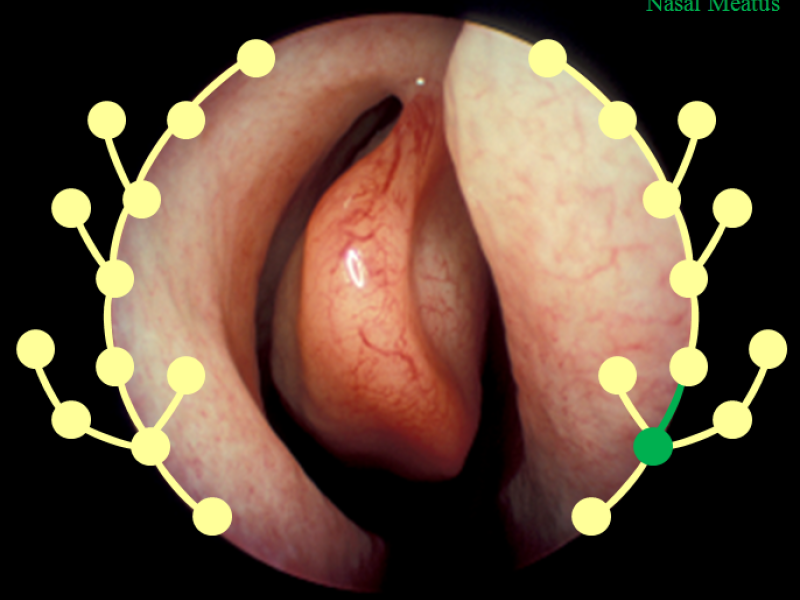

Fig. 1 Endonasal endoscopic surgery

To overcome these issues, optical tracking (OPT) or electromagnetic tracking (EMT) devices have been introduced to the operating room (OR). However, OPT requires a direct line of sight, EMT is susceptible to errors caused by ferromagnetic material, and both increase the technical complexity of the usually crowded OR.

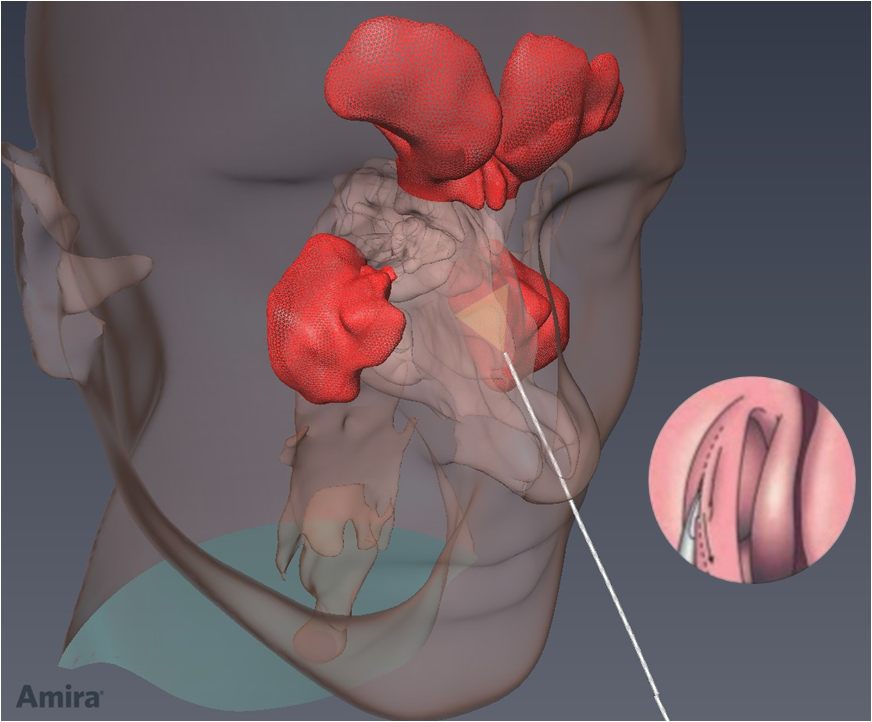

Fig. 2 Endoscope tracking using OPT (in red)

With the aim of reducing the technical complexity of the OR, we support the endoscopist in identifying the anatomical position of the endoscope within the lumina of the human body by annotating each real-time image frame with the label of the most similar-looking frame in a training database previously annotated by expert surgeons.

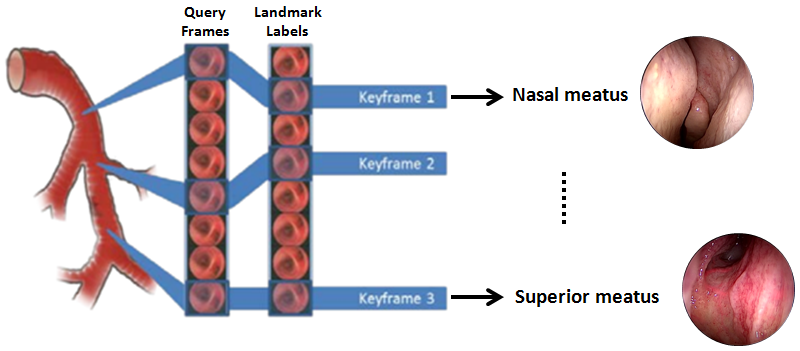

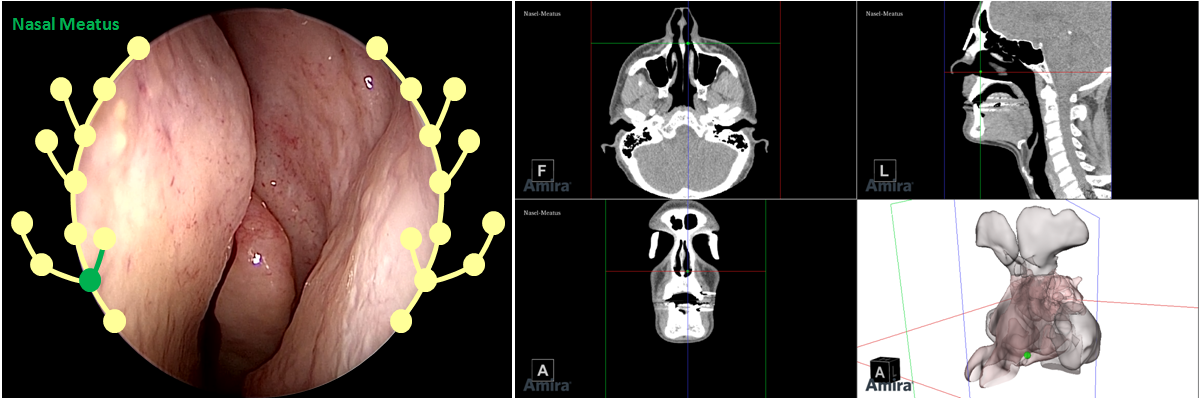

Fig. 3 A real-time query frame is mapped to the corresponding label in the database and visualized with respect to the CT image of the landmark.
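
The idea of mapping a query frame to the label of its most similar database frame can be illustrated with a minimal nearest-neighbour sketch. It assumes that every frame has already been reduced to a numeric feature vector and that similarity is measured by Euclidean distance; the function name `nearest_label`, the two-element feature vectors, and the landmark labels are hypothetical and are not taken from the project.

```python
import math
from typing import List, Sequence, Tuple

# One database entry: (feature vector, expert-assigned anatomical label).
Entry = Tuple[Sequence[float], str]


def nearest_label(query: Sequence[float], database: List[Entry]) -> str:
    """Return the label of the database entry closest to the query features."""
    if not database:
        raise ValueError("the annotated database is empty")
    # Euclidean distance is an assumption; any similarity measure could be used.
    _, label = min(database, key=lambda entry: math.dist(query, entry[0]))
    return label


# Hypothetical two-dimensional features and labels, for illustration only.
database = [
    ([1.0, 0.0], "middle turbinate"),
    ([0.0, 1.0], "nasal septum"),
]
label = nearest_label([0.9, 0.1], database)
```

In practice the feature vectors would be far higher-dimensional, and the lookup would typically be replaced by a trained classifier, as described in the next section.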

Scene Recognition Pipeline

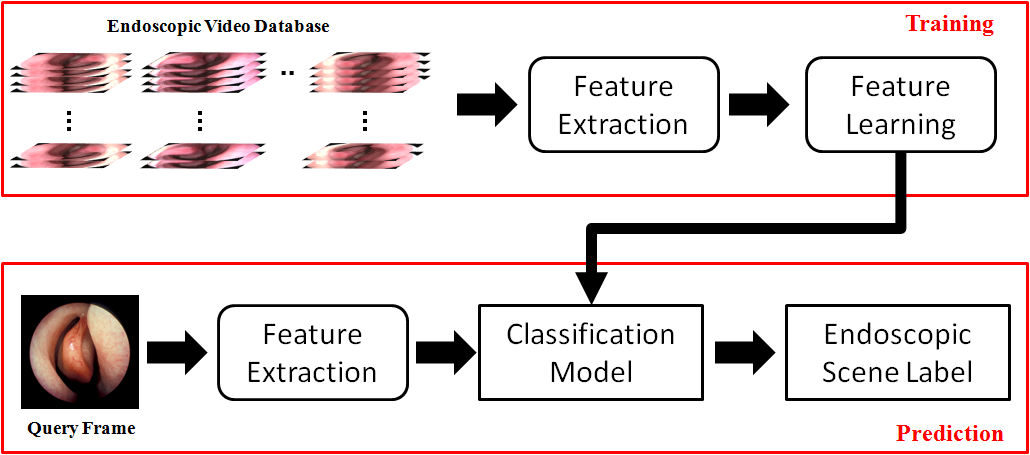

To achieve this goal, we select a limited set of representative images from expert-annotated endoscopic videos, extract informative image features, and train a classification model on these features; the model in turn generates the corresponding annotation for real-time query image frames.

Fig. 4 Training and testing stages of the scene recognition pipeline
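
The three stages above (selecting representatives, extracting features, learning a classifier) can be sketched in a few lines of plain Python. This is only an illustration under stated assumptions: the text does not specify the features or the classifier, so a normalized intensity histogram and a nearest-centroid model stand in for them here. All function names, the greedy selection rule, and the labels are hypothetical.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

Frame = List[List[int]]            # grayscale image, pixel values 0..255
Sample = Tuple[List[float], str]   # (feature vector, expert label)


def extract_features(frame: Frame, bins: int = 4) -> List[float]:
    """Assumed feature: normalized intensity histogram with `bins` bins."""
    hist = [0] * bins
    count = 0
    for row in frame:
        for px in row:
            hist[min(px * bins // 256, bins - 1)] += 1
            count += 1
    if count == 0:
        raise ValueError("empty frame")
    return [h / count for h in hist]


def sq_dist(a: List[float], b: List[float]) -> float:
    """Squared Euclidean distance between two feature vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def select_representatives(samples: List[Sample], min_dist: float) -> List[Sample]:
    """Greedy selection: drop a sample if a kept sample with the same label
    lies closer than `min_dist` in feature space."""
    kept: List[Sample] = []
    for feat, label in samples:
        if all(l != label or sq_dist(feat, f) >= min_dist ** 2 for f, l in kept):
            kept.append((feat, label))
    return kept


def train(samples: List[Sample]) -> Dict[str, List[float]]:
    """Assumed classifier: one mean feature vector (centroid) per label."""
    groups: Dict[str, List[List[float]]] = defaultdict(list)
    for feat, label in samples:
        groups[label].append(feat)
    return {label: [sum(col) / len(feats) for col in zip(*feats)]
            for label, feats in groups.items()}


def predict(model: Dict[str, List[float]], frame: Frame) -> str:
    """Annotate a query frame with the label of the nearest centroid."""
    feat = extract_features(frame)
    return min(model, key=lambda label: sq_dist(feat, model[label]))


# Tiny synthetic frames with hypothetical labels, for illustration only.
dark = [[10, 20], [30, 40]]
bright = [[250, 240], [230, 220]]
annotated = [(extract_features(dark), "nasal vestibule"),
             (extract_features(dark), "nasal vestibule"),   # near-duplicate
             (extract_features(bright), "middle meatus")]
representatives = select_representatives(annotated, min_dist=0.1)
model = train(representatives)
prediction = predict(model, [[5, 15], [25, 200]])
```

The training stage ends with `train`; the testing stage is `predict`, which only needs the stored centroids and therefore stays cheap enough to run per frame.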

Visualization

The operator is provided with a roadmap, consisting of images of the nasal divisions along the route. When the operator approaches a division in the airways, the system shows which branch to follow.

Fig. 5 The current position and graphical navigation hints are overlaid on the endoscopic video and visualized within an anatomical reference model and CT.
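
One way to picture the roadmap logic is as a graph of landmarks in which the system looks up the next branch on the shortest route to the target. The sketch below assumes such a graph representation and uses breadth-first search; the function `next_branch` and the landmark names are hypothetical placeholders rather than the project's actual anatomical model.

```python
from collections import deque
from typing import Dict, List, Optional


def next_branch(roadmap: Dict[str, List[str]], current: str, target: str) -> Optional[str]:
    """Return the neighbouring landmark to move to next on a shortest route
    from `current` to `target`, or None if already there or unreachable."""
    if current == target:
        return None
    parents: Dict[str, Optional[str]] = {current: None}
    queue = deque([current])
    while queue:
        node = queue.popleft()
        if node == target:
            break
        for neighbour in roadmap.get(node, []):
            if neighbour not in parents:
                parents[neighbour] = node
                queue.append(neighbour)
    if target not in parents:
        return None
    # Walk back from the target until the step directly after `current`.
    step = target
    while parents[step] != current:
        step = parents[step]
    return step


# Hypothetical roadmap; the landmark names are illustrative only.
roadmap = {
    "nasal vestibule": ["inferior meatus", "middle meatus"],
    "middle meatus": ["maxillary sinus ostium", "frontal recess"],
}
hint = next_branch(roadmap, "nasal vestibule", "frontal recess")
```

The current landmark would come from the scene recognition step, so the hint can be refreshed whenever the predicted label changes.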