Knowledge of the 3D geometry of anatomical structures facilitates computer-assisted diagnosis and therapy planning, and medical image data provides the basis for reconstructing such geometries. Automatic shape reconstruction faces two main challenges. The first is the wide variety of anatomical structures in terms of size, shape, topology, and degree of pathology. The second stems from the limitations of the imaging process: measurement errors include artifacts from foreign objects, motion artifacts, noise, and low contrast. These errors make segmentation an inherently ill-posed problem.

CNNs, specifically the UNet and its variants, have achieved state-of-the-art performance on a large number of segmentation tasks. However, they rely on a large set of annotated training images. This requirement becomes more critical when the image data is imperfect, e.g., when it contains artifacts, low contrast, or indistinct boundaries. In such cases, even slight deviations from the training data can cause a drop in performance, i.e., the networks have low robustness to outliers. Furthermore, classical UNets generally do not provide any topological guarantees, e.g., on the number of connected components or holes in the anatomical structure of interest. The goal of this project is to mitigate some of these limitations by incorporating prior shape knowledge.
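
To make "topological guarantee" concrete, the following is a minimal sketch (our own illustration, not part of the project's method) that counts the 4-connected foreground components of a 2D binary mask with a breadth-first search. The helper name `count_components` and the toy mask are hypothetical. If the target is a single connected structure, a count above one in a UNet prediction reveals spurious fragments, which is exactly the kind of error a plain UNet does not rule out.

```python
from collections import deque
from typing import List


def count_components(mask: List[List[int]]) -> int:
    """Count 4-connected foreground components in a 2D binary mask."""
    rows = len(mask)
    cols = len(mask[0]) if mask else 0
    seen = [[False] * cols for _ in range(rows)]
    count = 0
    for r in range(rows):
        for c in range(cols):
            if mask[r][c] and not seen[r][c]:
                # Unvisited foreground pixel: start a new component and flood it.
                count += 1
                seen[r][c] = True
                queue = deque([(r, c)])
                while queue:
                    y, x = queue.popleft()
                    for dy, dx in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                        ny, nx = y + dy, x + dx
                        if (0 <= ny < rows and 0 <= nx < cols
                                and mask[ny][nx] and not seen[ny][nx]):
                            seen[ny][nx] = True
                            queue.append((ny, nx))
    return count


# Hypothetical prediction: one main blob plus an isolated false-positive pixel.
pred = [
    [0, 1, 1, 0, 0],
    [0, 1, 1, 0, 0],
    [0, 0, 0, 0, 1],
]
print(count_components(pred))  # 2
```

With 4-connectivity, diagonal neighbors end up in separate components; an 8-connected variant would only need the four diagonal offsets added to the neighbor list.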

Example segmentations

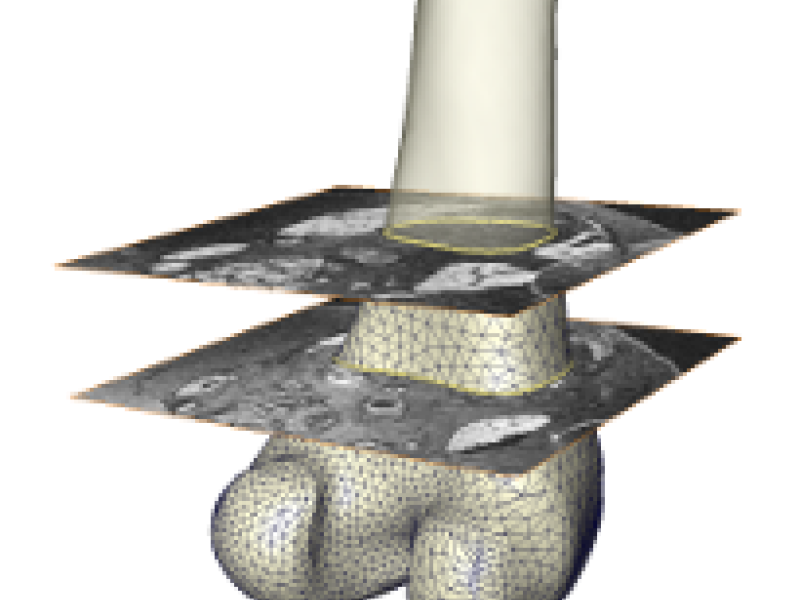

Clinical Motivation: Shape Transfer between Modalities

Different imaging modalities are suited to depicting different anatomical structures. For example, a CT scanner depicts bony structures particularly well, while an MRI scanner resolves soft tissue in greater detail. From a clinical perspective, however, it is often useful to obtain a plausible reconstruction of a bony structure from an MRI image, even though detailed information in the image may be partially missing, for example due to a lack of contrast. Training a CNN for such a segmentation task would require a large pool of manually annotated images, which are particularly tedious to produce because of the missing details. Annotating a CT scan, by contrast, is much easier, since the structure is well depicted. It is therefore desirable to extract shape knowledge from a set of segmentation masks of CT scans and to employ this knowledge to improve the segmentation of MRI scans wherever the image information is locally uninformative. In addition, the predictions should come with uncertainty estimates. These would allow the clinician to distinguish reliable parts of the segmentation from parts that have been estimated from prior shape knowledge rather than from the underlying image.

Shape Priors in Medical Imaging

Prior to the rise of CNNs, so-called model-based segmentation methods used statistical shape models (SSMs) to enforce the anatomical plausibility of predicted shapes. An SSM is built from a set of training shapes represented as triangular meshes, which are assumed to be in correspondence with each other w.r.t. prominent geometric features. The vertex coordinates are modeled by a multivariate normal distribution, from which the main modes of shape variation are extracted through principal component analysis. While SSMs provide robust priors, they suffer from two shortcomings: first, the corresponding meshes are tedious to create, and second, they tend to have limited model capacity, that is, they struggle to represent new shapes accurately at the level of detail required for segmentation.
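
The SSM construction described above can be sketched in a few lines. This is a minimal illustration, assuming the training meshes are already rigidly aligned and in vertex-wise correspondence, stored as a NumPy array of shape (n_shapes, n_vertices, 3). The function names `build_ssm`, `synthesize`, and `project` and the default of 95% retained variance are hypothetical choices, not taken from any particular SSM library. PCA is computed via an SVD of the centered data matrix, which yields the same modes as an eigendecomposition of the sample covariance without forming that (3V x 3V) matrix.

```python
import numpy as np


def build_ssm(shapes, variance_kept=0.95):
    """Build a PCA-based statistical shape model (illustrative sketch).

    shapes: array of shape (n_shapes, n_vertices, 3). Assumes the meshes are
    already aligned and that vertex i corresponds across all meshes.
    Returns (mean, modes, variances): the mean shape vector of length
    3 * n_vertices, orthonormal modes as columns, and each mode's variance.
    """
    shapes = np.asarray(shapes, dtype=float)
    n = shapes.shape[0]
    X = shapes.reshape(n, -1)      # one row of stacked (x, y, z) per shape
    mean = X.mean(axis=0)
    D = X - mean                   # centered data matrix
    # Right singular vectors of D = eigenvectors of D.T @ D / (n - 1).
    _, s, Vt = np.linalg.svd(D, full_matrices=False)
    variances = s**2 / (n - 1)     # eigenvalues of the sample covariance
    ratio = np.cumsum(variances) / variances.sum()
    # Keep the smallest number of modes explaining `variance_kept`.
    k = min(int(np.searchsorted(ratio, variance_kept)) + 1, len(variances))
    return mean, Vt[:k].T, variances[:k]


def synthesize(mean, modes, variances, b):
    """New shape: mean + sum_j b_j * sqrt(lambda_j) * mode_j (b in std. devs)."""
    return mean + modes @ (np.asarray(b, dtype=float) * np.sqrt(variances))


def project(mean, modes, x):
    """Closest shape the model can represent (least squares; modes orthonormal)."""
    return mean + modes @ (modes.T @ (x - mean))
```

The residual `x - project(mean, modes, x)` for a new, flattened shape `x` gives a simple measure of the limited model capacity: any detail outside the span of the retained modes cannot be reproduced.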

Recent developments in CNNs offer new ways to learn shape priors from examples. In contrast to SSMs, CNNs can process segmentation masks directly, without the need to create corresponding triangular meshes. Furthermore, CNN-based shape priors integrate naturally with existing CNN-based segmentation pipelines such as the UNet. Our focus therefore lies on exploring novel ways of learning shape knowledge with CNNs and embedding it into image-based segmentation methods.